Machine Learning project lifecycle - from Scoping to Proof Of Concept

At Contentsquare, we explore the power of Machine Learning (ML) to solve problems. The domain application range is wide and can be related to understanding user behavior, detecting frustrating errors, computing product performance, or many others. At any point in time, the data science team is designing in parallel dozens of models, all with the potential to go into production.

So, how do we manage to have common building blocks and ways of working across projects? How do we keep track, stage after stage, of the feasibility of our solutions from both a technical and business perspective? We wanted a blueprint, something agnostic to the task at hand or the technology specifics. Drawing from our respective experiences, we ended up with a recurrent pattern, something we now call our “typical ML project lifecycle”.

Global picture: What is the typical lifecycle of a ML project?

As a company with over thousands of clients and so many disruptive features to add to our product, we need to make sure that the selected project is indeed in line with the company vision.

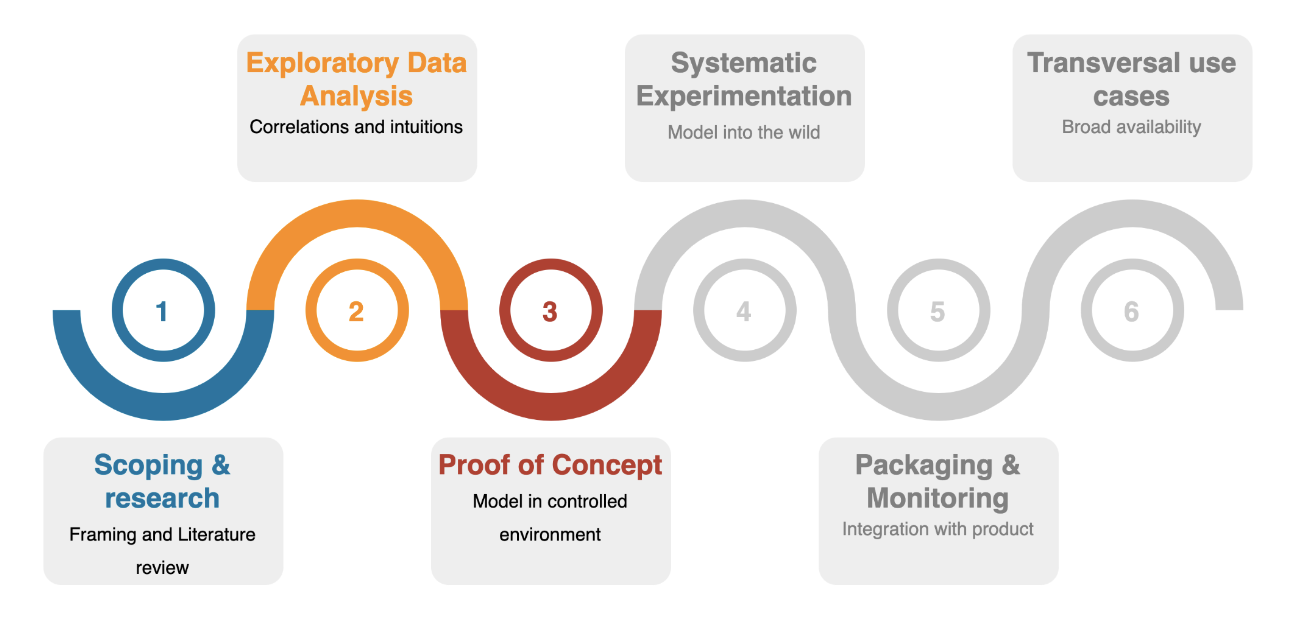

The lifecycle consists of a set of development and maturity phases, that can be split into 6 parts:

- Scoping & Research

- Exploratory Data Analysis

- Proof of Concept

- Systematic experimentation

- Packaging & Monitoring

- Transversal use-cases

Throughout the journey of a company, a lot of ideas emerge; are explored but then only a few of them are being shipped into production. Reprioritization, lack of resources, lack of data or low data quality could all yield the project being put aside. For this article, we only concentrate on the first three stages, “Scoping & research”, “Exploratory data analysis” (EDA), and “Proof of concept” (PoC) - basically everything that is exclusively related to Data Scientist’s / Machine Learning Engineer’s exploration tasks.

1. Scoping & Research

The main goal of this first stage is to determine the project technical and business feasibility. This is how we avoid repeating previous mistakes and reinventing the wheel.

- Align with the company and its product vision

Don’t do projects just for fun!

A company, whatever their size and maturity, has a vision. Our mission - at this stage - is to validate that our project contributes to it. Indeed, we select projects to solve either a need or an opportunity identified by the product. We naturally then fit into what we call a “product line”, where we can refer to stakeholders to get additional inputs and finally - together - come up with a tentative strategy. It can happen that what we are willing to build can be integrated on multiple products. If so, there is an arbitrage to be made based on highest impact, available resources, etc.

- Identify and understand existing related projects within the company

Avoid dead ends and redundancy!

Before jumping into our project, we need to verify whether there has been some work related to it within the company, either stopped or ongoing. If a past related project has been stopped, we investigate the reasons for it and make sure there have been some key advancements in technology/data sources/tools/resources during the elapsed time. If it is still ongoing, we make sure we are not just duplicating the work.

- Conduct a literature review

Don’t try to reinvent the wheel!

The first question we ask ourselves at this stage is: “Can I refer back to existing materials in the literature?” and we answer it by looking at some related tasks in a field or domain similar to the company and its potential clients. The literature should provide key insights on some stages of the pipeline (data sources, feature creation, modeling, practical use-cases, etc.) and give an intuition of the results we might be able to achieve. Overall, the literature review guides us to use approaches that have been proved to work in similar contexts.

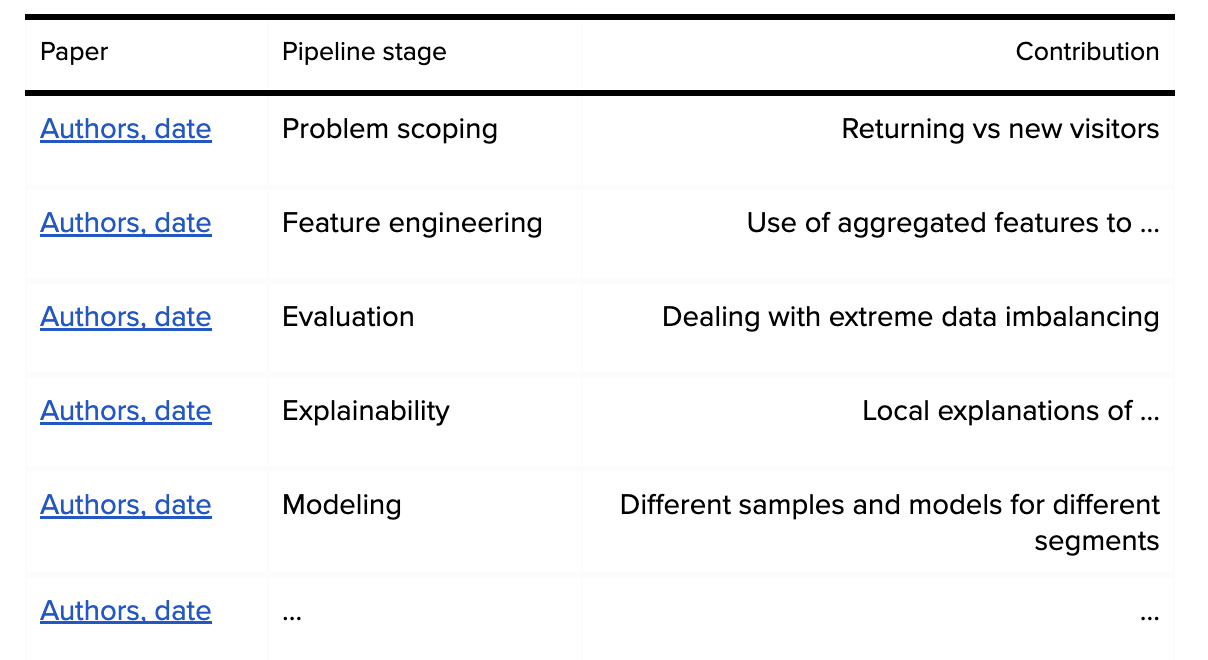

To ease communication with other stakeholders, we build a literature review document where each research paper includes a short paragraph explaining what this resource is bringing to the table and why it is relevant for our use case. We also decided to compress the information in what we call a “Useful literature digest”, our point of reference:

- Assess sources and types of data to use

Make sure you have all the tools at hand!

Now that we have a sense of what is used in the literature, we should know what is actually available in the company. For instance, we will be looking for the types of data to use, the way they are stored and thus can be queried, the specificities of some of them (available for all VS a portion of our clients, GDPR constraints, data retention, etc.), the data quality (and reliability), etc. We spend quite some time doing so because we all know that data is key and we don’t want to miss some crucial entries.

- Rescope and communicate

Be realistically optimistic!

At this point, we have all the tools to build a high-level roadmap of achievable short to mid term tasks. It is the perfect time to communicate to the other stakeholders the inputs/outputs of our model, potential challenges we might face during exploration and prototyping, and a minimal performance we think we can achieve.

2. Exploratory Data Analysis

The second phase of this ML lifecycle is critical as it helps build intuitions on the data at hand, spot recurring patterns and overall ensure that the model is likely to achieve its goals.

- Set up the data pipeline

Freeze your dataset!

The goal is to build the extraction pipeline and have data clearly identified at our disposal to perform some exploration. We need to settle on a strategy of fetching: whether at a day/month level, full or sampled data points. It is also the right moment to frame the analysis to one or multiple clients. Will we apply it to all customers or only a selected few? Do we need to check behavioral data on desktop, on mobile or both? All those constraints are either design choices or simplification measures and need to be made explicit for every stakeholder to be in line with the exploration scope.

- Perform feature selection and engineering

Shape your data!

In the first phase of scoping and research, we have spotted features that are used in the literature, as well as the ones that are available in our data sources. We use those findings to come up with a first selected set of features. Exploration allows us to get a feeling of how reliable the data is. Indeed, data extracted from production systems are not perfect: we can spot mistakes, exceptions, edge-cases, missing values, deprecated fields, etc. We need to master our data since the quality of output is determined by the quality of the input (garbage in, garbage out). Also, features are available in raw formats. While, in some use cases, working directly with raw data is possible, in others, we would need to perform some feature engineering to build meaningful features. These types of aggregation are to be made during this phase.

- Statistics and visualization

Graphs are worth a thousand numbers!

Now that we have a fixed dataset, we analyze more in depth feature correlation, distribution and importance. The analysis is twofold:

- Quantitative: any analysis based on the whole dataset like features distributions, outlier detection, category counts, unbalancing in your dataset, etc.

- Qualitative: we hand pick a small subset and assess features quality, challenge the business meaning of target labels, verify some human assumptions, etc.

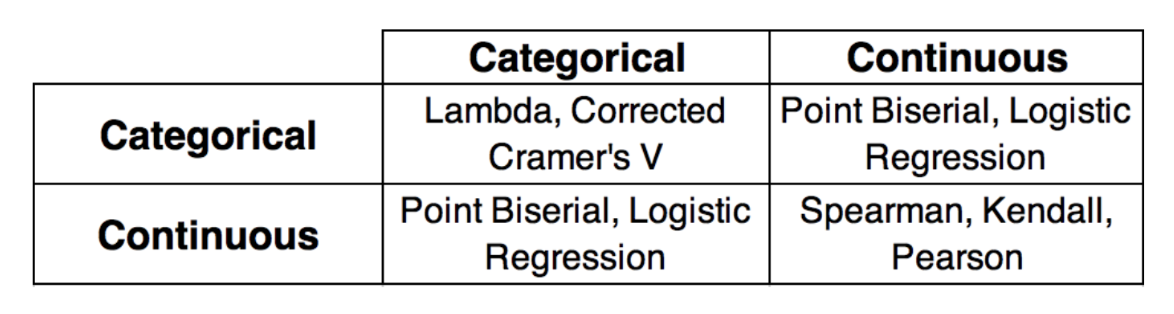

Performing correlation analysis is critical since it can have a significant impact on your model. We rely on the table below to compute correlation metrics based on whether we compare two categorical variables, two continuous variables or a mix of the two.

Which correlation metric should you use?

Source: An overview of correlation measures between categorical and continuous variables

- Define the baseline

Don’t get into complex models just yet!

To quote Albert Einstein, “Everything should be made as simple as possible, but not simpler”. Indeed, it can happen that only with a very simple set of rules, we achieve acceptable performance. If so, maybe dedicating resources to build complex models does not make sense anymore, and there is an arbitrage to be made with all the stakeholders. However, if after all we estimate - based on our thorough analysis - that there is an improvement margin to aim at, we build a simple model to have a baseline performance. It will be a reference point to put into perspective the results of more complex models, to be implemented and evaluated in the next phase. So choose your baseline wisely!

3. Proof of Concept

The third phase of this ML lifecycle is when we prototype a solution and iterate over it. The aim is to mature the project in terms of different sets of training features, models and evaluation metrics. At each iteration, we improve our modeling, always assessing the tradeoff between accuracy and cost.

- Set up the extended data pipeline

Bigger, more representative!

Until now, we had scoped our dataset to fit the needs of exploration. It is time to enlarge this dataset to broader time ranges, multiple clients and so on. The idea here is to make sure that the performance we measure and on which we rely is consistent across different sets. Also, we need to think about the fetching strategy to adapt to potential challenges such as data imbalance, missing labels, seasonality bias, etc.

- Perform data pre-processing

Get your features ready!

Some features we have selected in the previous step might need processing such as cleaning, aggregation, etc. As the newly built dataset has a bigger size, it might also make sense to parallelize this processing strategy. There are multiple tools to speed up the computation and - depending on the use case - we can either rely on a Multi-threading or a Multi-processing strategy. Learn more about the difference between the two in our previous article.

- Model and set up the evaluation metrics

Let’s get our hands dirty!

This step consists in finally getting quantitative results to address the task at hand. But before that, we list all possible solutions and prioritize them based on the following criteria:

- complexity: we iterate from simplest to most complex ideas and implementations;

- explainability: we prefer ideas that are explainable to a large audience and can be shipped to the product as intermediate checkpoints;

- accuracy vs. cost: we evaluate the tradeoff between expected performance and cost of integration/serving.

We also need to settle on the right evaluation metrics for the problem. Is there a business need oriented towards precision or recall? Is the data imbalanced? All those questions should guide the decision to choose one or multiple metrics that indeed reflect what we are trying to perform.

- Predict and assess performance

Don’t forget the biases!

We made it till here, everything is set up. Let’s roll everything out and see where the model stands. First thing we should verify is that our measured quantitative performance is above the initial baseline of the EDA step. Then, we should qualitatively assess the performance using some explainability approaches to get the feature importance, understand the decision threshold, etc. Finally, we should consider the potential biases we might have introduced and correct them, if any. We know that biases can be in the data itself, the models and the evaluation. We thus audit the full pipeline and rely on what is explained in this article.

The above-mentioned steps are repeated for each iteration of the PoC. A new iteration can differ from the previous ones in terms of dataset, model, or business specs.

Conclusion

We presented a blueprint to consistently handle ML projects within a company, from the standpoint of a Data Scientist. It has been successfully applied within the Contentsquare Data Science team and has considerably helped share knowledge, best practices, common data pipelines and code overall. To sum up, we found that dividing ML workflow into three phases works well for us. During the Scoping & Research phase, we first assess the technical and business feasibility; then there is the EDA phase where we build intuitions on our data and finally; the PoC phase where we prototype and iterate over solutions. Our work is sequential, e.g we never try to build a first PoC before making sure the product vision is aligned, and having explored the data at hand.

Now that you have a better idea of how we iterate over technical solutions to address business needs, join the adventure!

Many thanks to Tim Carry, Mohammad Reza Loghmani, Daria Stefic, Philipe Moura and Amine Baatout for their reviews!