How we reshaped our Test Pyramid

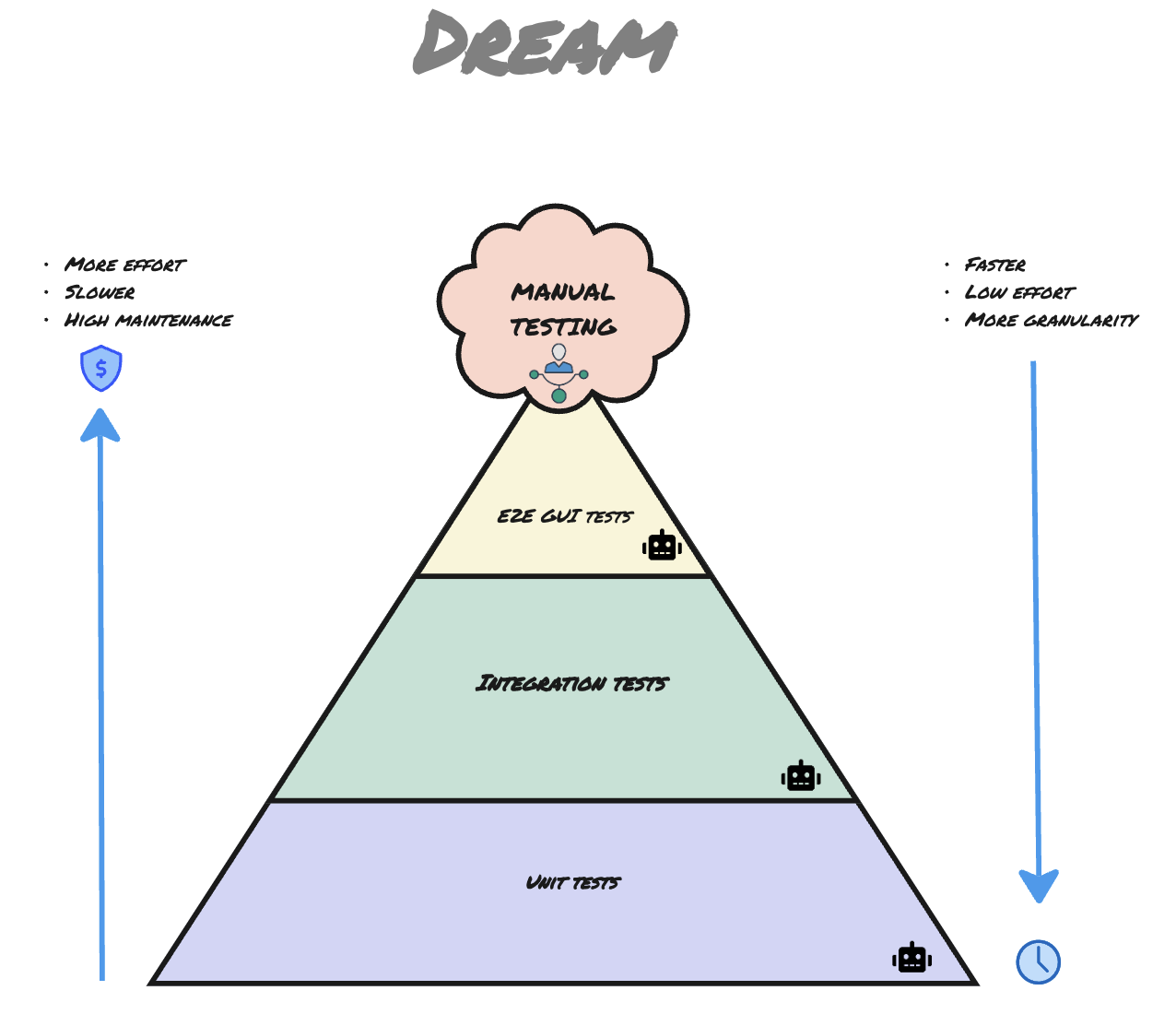

Since ancient times, pyramids have stood as iconic structures, symbolizing strength and stability. As software engineers, we have our own: the Test Pyramid. It acts as a framework for organizing and prioritizing our testing efforts, with a wide base of micro-level tests gradually narrowing to macro-level tests as the scope increases. However, like any structure, the Test Pyramid can lose its shape over time.

Deviating from a proper pyramid structure in testing can lead to imbalanced coverage, increased costs, longer feedback loops, and difficulties in pinpointing and resolving issues. A few years ago, Contentsquare faced this exact challenge.

But fear not! Recreating a proper Test Pyramid is a worthwhile journey, one that demands patience, persistence, and a commitment to quality. Join me as we restore balance and build a stronger foundation for our software testing efforts.

Test Pyramid - Striving for the Ideal in Real Life

Imagine this: your software engineering team is racing against time to deliver a crucial new feature. However, every code change triggers a painfully slow end-to-end (E2E) test suite that takes hours to complete. To make matters worse, these tests are unstable. They generate false positives that hinder the team’s progress and slow down business delivery.

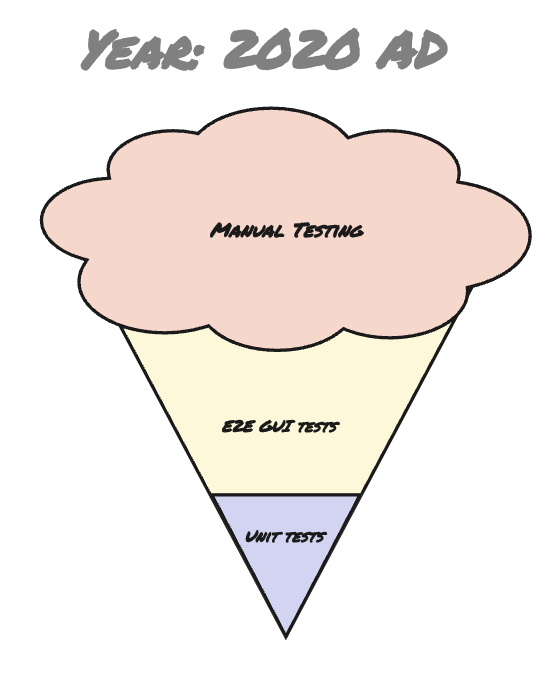

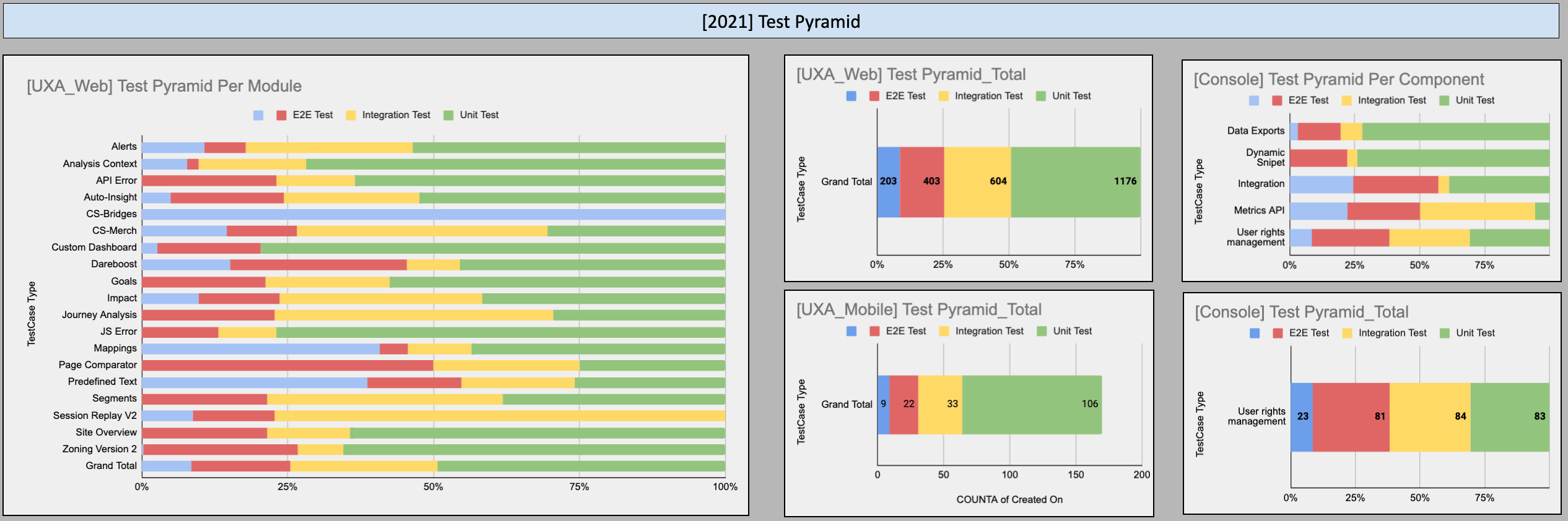

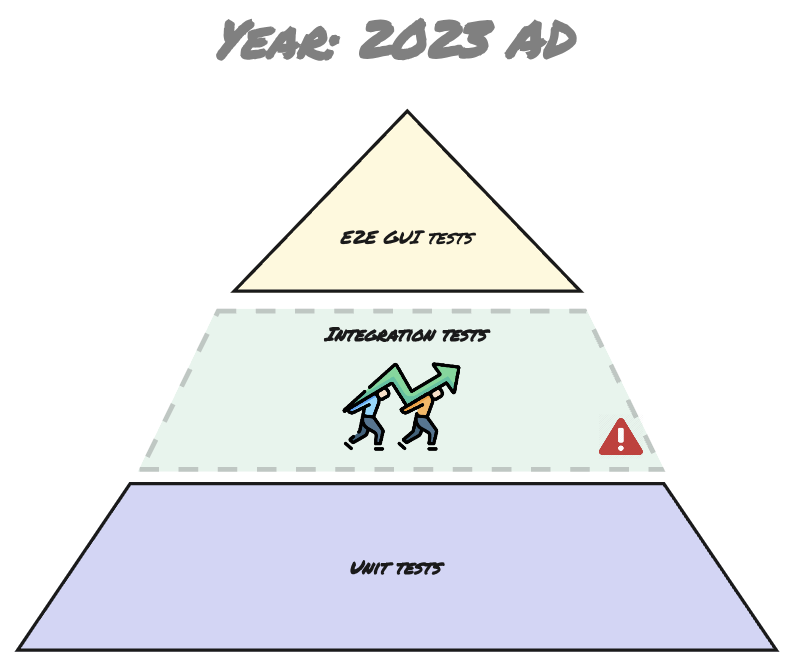

That was the predicament we faced at Contentsquare in 2020, with a relatively new Quality Team. Our Test Pyramid was completely misshapen: an overloaded E2E suite of approximately 800 test cases and a scarcity of unit and integration tests. This imbalance led to a host of challenges. Maintaining flaky E2E tests created a heavy workload, development cycles slowed because those tests blocked branch builds, and we relied heavily on manual testing. As a result, both developers and Quality Engineers (QEs) grew increasingly frustrated.

To address this issue, we launched an initiative to reshape our Test Pyramid, requiring collaboration among developers, QEs, and other stakeholders. Achieving a balanced Test Pyramid isn’t easy. It’s akin to embarking on a diet – a conscious effort to consume healthy food and exercise regularly. You won’t see results overnight, but over time, you’ll start to see the benefits of your efforts.

Itinerary

Departure: Clearly define Unit Test, Integration Test, and E2E Test

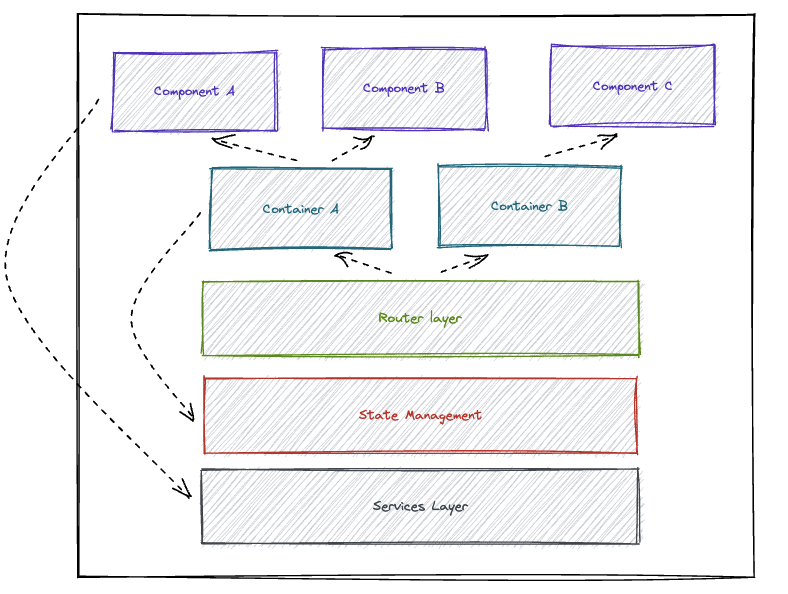

As a reminder, Contentsquare adopted the Micro Frontend (MFE) architecture when our monolithic codebase became too large. This architecture requires deeper modularization and cleaner boundaries:

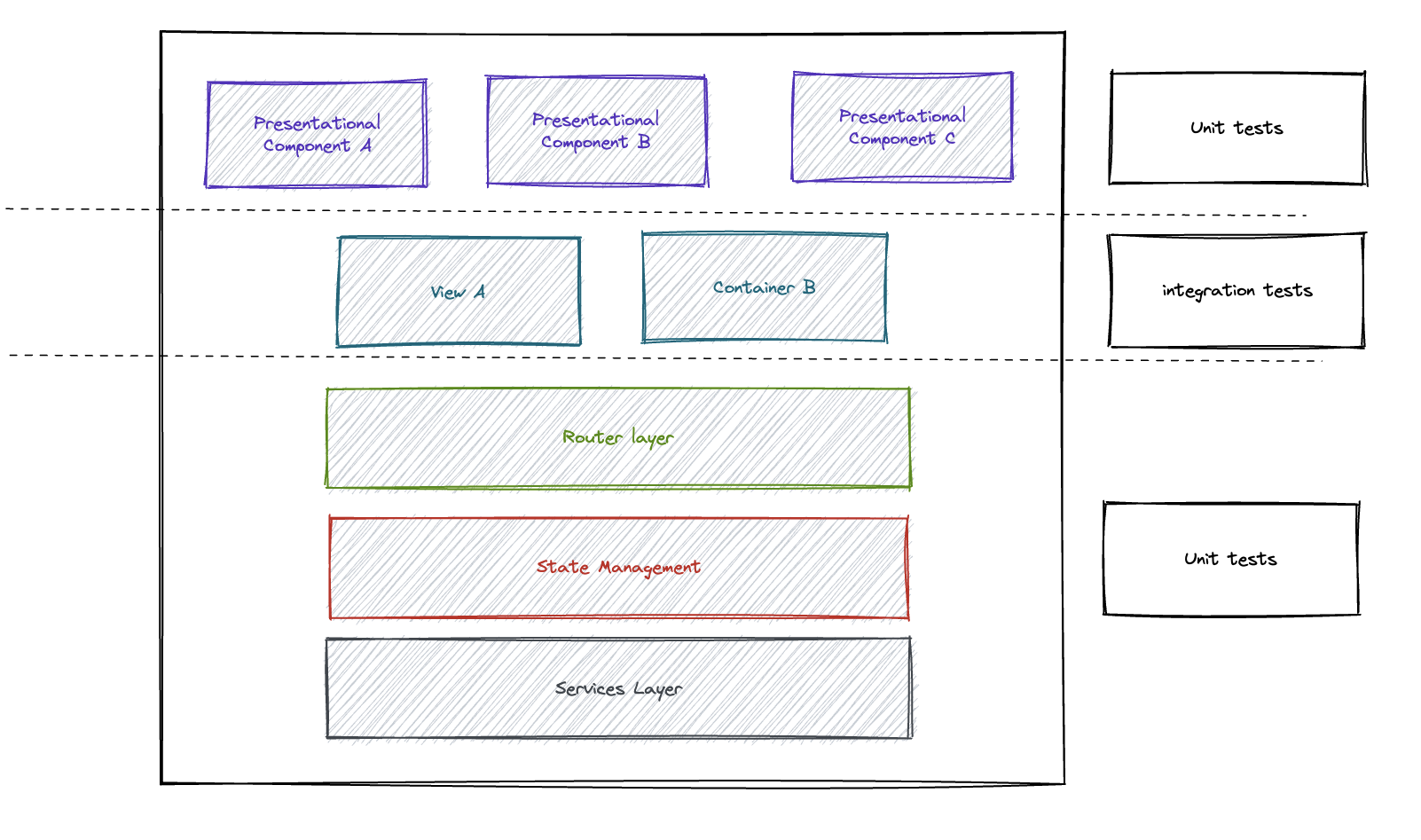

As part of our initiative, we began by clearly defining what constitutes a Unit Test, Integration Test, and E2E Test:

Under the MFE architecture, integration tests can be designed with unit test techniques, but they require more mocked data, services, and components than unit tests do. Additionally, instead of mounting or compiling individual components, we mount or compile containers (which house the components under test), or the entire MFE if it is small.

For example, in an MFE, an integration test might evaluate how a group of components works together to implement a specific feature, such as a search form or a user profile page. The components would be mounted with @vue/test-utils (in a Vue-based MFE) or TestBed (in an Angular MFE), and every dependency the feature needs to function correctly would be included in the test. The test would simulate user interactions, such as entering search terms or clicking buttons, and verify that the application responds correctly.
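The following minimal TypeScript sketch shows the difference in scope without any framework. It illustrates the idea under stated assumptions and is not Contentsquare’s code. `TodoContainer`, `TodoApi`, and `splitByStatus` are hypothetical names. The API is synchronous for brevity, and the "tests" are plain functions that return `true` on success rather than Jest specs. The unit test exercises a single function with nothing to mock. The integration test runs a container together with the logic it uses and replaces only the external API with a stub.

```typescript
// Hypothetical types and names for illustration only; not Contentsquare's code.
interface Todo {
  id: number;
  title: string;
  completed: boolean;
}

// External dependency that the integration test replaces with a test double.
// Synchronous to keep the sketch short; a real API client would return a Promise.
interface TodoApi {
  fetchTodos(): Todo[];
}

// A single piece of logic: the scope of a unit test.
function splitByStatus(todos: Todo[]): { open: Todo[]; done: Todo[] } {
  return {
    open: todos.filter((t) => !t.completed),
    done: todos.filter((t) => t.completed),
  };
}

// A "container" that owns state and wires the API and the logic together:
// the scope of an integration test.
class TodoContainer {
  private todos: Todo[] = [];
  private readonly api: TodoApi;

  constructor(api: TodoApi) {
    this.api = api;
  }

  load(): void {
    this.todos = this.api.fetchTodos();
  }

  complete(id: number): void {
    this.todos = this.todos.map((t) => (t.id === id ? { ...t, completed: true } : t));
  }

  view(): { open: string[]; done: string[] } {
    const { open, done } = splitByStatus(this.todos);
    return { open: open.map((t) => t.title), done: done.map((t) => t.title) };
  }
}

// Unit test: one function, no collaborators, nothing to mock.
function unitTestSplitByStatus(): boolean {
  const { open, done } = splitByStatus([
    { id: 1, title: "a", completed: true },
    { id: 2, title: "b", completed: false },
  ]);
  return open.length === 1 && done.length === 1;
}

// Integration test: container and logic run together; only the API is stubbed.
function integrationTestCompleteTodo(): boolean {
  const stubApi: TodoApi = {
    fetchTodos: () => [
      { id: 0, title: "Grocery shopping", completed: false },
      { id: 2, title: "Pick up kid from school", completed: true },
    ],
  };
  const container = new TodoContainer(stubApi);
  container.load();
  container.complete(0); // simulate the user completing the first item
  const { open, done } = container.view();
  return open.length === 0 && done.includes("Grocery shopping");
}
```

In the Angular and Vue examples, `TestBed` or `mount` plays the role of `new TodoContainer(...)`, and Jest mocks play the role of `stubApi`.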

Integration Test With Angular + NGRX + Jest Example

```typescript
import { TestBed, ComponentFixture, fakeAsync, flush } from '@angular/core/testing';
import { By } from '@angular/platform-browser';
import { Store, StoreModule } from '@ngrx/store';
import { EffectsModule } from '@ngrx/effects';
import { MatDialog, MatDialogModule, MatDialogRef } from '@angular/material/dialog';
import { MatTabsModule } from '@angular/material/tabs';
import { MatCardModule } from '@angular/material/card';
import { NoopAnimationsModule } from '@angular/platform-browser/animations';
import { FormBuilder } from '@angular/forms';
import { of } from 'rxjs';
import { HomeComponent } from './home.component';
import { TodoListComponent } from '../todo-list/todo-list.component';
import { TodoDialogComponent } from '../todo-dialog/todo-dialog.component';
import { todosReducer } from '../state/todos/todo.reducer';
import { TodoEffects } from '../state/todos/todo.effect';
import { TodoService } from '../services/todo.service';
import { Todo } from '../models/todo';

const todoItems: Todo[] = [
  { id: 0, title: 'Grocery shopping', description: '1. A pack of carrots\n2. Eggs', completed: false },
  { id: 1, title: 'Integration Test', description: '10 tests', completed: false },
  { id: 2, title: 'Pick up kid from school', description: '', completed: true }
];

describe('HomeComponent Integration Test', () => {
  let component: HomeComponent;
  let fixture: ComponentFixture<HomeComponent>;
  let todosService: TodoService;
  let store: Store;

  // Create a partial stub for the TodoService with mocked methods
  const todosServiceStub: Partial<TodoService> = {
    getTodos: jest.fn().mockReturnValue(of(todoItems)),
    addTodo: jest.fn(),
    updateTodo: jest.fn().mockImplementation((todo: Todo) => {
      // Clone the original todoItems array
      const updatedTodos = todoItems.slice();
      // Find the index of the todo item to be updated
      const index = updatedTodos.findIndex(item => item.id === todo.id);
      if (index !== -1) {
        // If the item is found, replace it with the new data
        updatedTodos[index] = todo;
      }
      // Return the updated todos as an observable
      return of(updatedTodos);
    }),
    deleteTodo: jest.fn().mockImplementation((id: number) => {
      // Filter out the todo item with the specified id and return the remaining items as an observable
      return of(todoItems.filter((todo) => todo.id !== id));
    })
  };

  // Create a partial stub for the MatDialogRef with a close method
  const matDialogRefStub: Partial<MatDialogRef<any>> = {
    close: () => {},
  };

  beforeEach(async () => {
    // compileComponents() returns a Promise, so it must be awaited
    await TestBed.configureTestingModule({
      declarations: [HomeComponent, TodoListComponent, TodoDialogComponent],
      imports: [
        StoreModule.forRoot({ todos: todosReducer }),
        EffectsModule.forRoot([TodoEffects]),
        MatDialogModule,
        MatTabsModule,
        MatCardModule,
        NoopAnimationsModule,
      ],
      providers: [
        { provide: TodoService, useValue: todosServiceStub },
        { provide: MatDialog, useClass: MatDialog },
        { provide: MatDialogRef, useValue: matDialogRefStub },
        { provide: FormBuilder, useClass: FormBuilder }
      ],
    }).compileComponents();

    // Inject the TodoService and Store
    todosService = TestBed.inject(TodoService);
    store = TestBed.inject(Store);

    // Create the component fixture
    fixture = TestBed.createComponent(HomeComponent);
    component = fixture.componentInstance;

    // Trigger change detection to initialize the component
    fixture.detectChanges();
  });

  afterEach(() => {
    // Clear all mock functions after each test
    jest.clearAllMocks();
    // Reset the testing module
    TestBed.resetTestingModule();
  });

  it('should be able to complete an incomplete todo item', fakeAsync(() => {
    // Get the first incomplete todo item in the DOM
    let firstIncompleteTodoItem = fixture.debugElement.query(By.css('mat-card'));

    // Expect it to contain the title of the first sample todo item
    expect(firstIncompleteTodoItem.nativeElement.textContent).toContain(todoItems[0].title);

    // Get the complete button (third button in the card actions)
    const completeButton = firstIncompleteTodoItem
      .query(By.css('mat-card-actions'))
      .queryAll(By.css('button'))[2];

    // Simulate a click event on the complete button
    completeButton.nativeElement.click();

    // Trigger change detection
    fixture.detectChanges();

    // Get the first incomplete todo item in the DOM after completion
    firstIncompleteTodoItem = fixture.debugElement.query(By.css('mat-card'));

    // Expect it not to contain the title of the first sample todo item anymore
    expect(firstIncompleteTodoItem.nativeElement.textContent).not.toContain(todoItems[0].title);

    // Verify that the item has been moved to the Completed tab
    // Get the Completed tab in the DOM
    const completedTab = fixture.debugElement.queryAll(By.css('.mdc-tab'))[1];

    // Simulate a click event on the Completed tab
    completedTab.nativeElement.click();

    // Trigger change detection
    fixture.detectChanges();

    // Flush pending asynchronous tasks (e.g. timers scheduled by the tab switch), then re-render
    flush();
    fixture.detectChanges();

    // Get the first completed todo item in the DOM
    const firstCompletedTodoItem = fixture.debugElement.queryAll(By.css('mat-card'))[1];

    // Expect it to contain the title of the first sample todo item
    expect(firstCompletedTodoItem.nativeElement.textContent).toContain(todoItems[0].title);
  }));
});
```

Integration Test With Vue3 + Pinia + Jest Example

```typescript
import { flushPromises, mount, VueWrapper } from '@vue/test-utils';
import { createTestingPinia } from '@pinia/testing';
import TodoApp from '@/components/TodoApp.vue';
import type { Todo } from '@/models/todo';
import { useTodosStore } from '@/stores/store';
import { getTodos, addTodo } from '@/api/todo-service';

// Mock the todo-service module (Jest replaces every export with an automatic mock)
jest.mock('@/api/todo-service');

const todoItems: Todo[] = [
  { id: 0, title: 'Grocery shopping', description: '1. A pack of carrots\n2. Eggs', completed: false },
  { id: 1, title: 'Integration Test', description: '10 tests', completed: false },
  { id: 2, title: 'Pick up kid from school', description: '', completed: true }
];

const newItem: Todo = {
  id: 4,
  title: 'Piano',
  description: 'Practice Fur Elise for 30 minutes',
  completed: false
};

describe('TodoApp.vue', () => {
  let wrapper: VueWrapper<unknown, any>;
  let store: any;

  beforeEach(async () => {
    // Mock the getTodos function to resolve with a copy of the sample todo items
    (getTodos as jest.Mock).mockResolvedValue(todoItems.slice());

    // Mount the TodoApp component with the Pinia testing plugin, keeping the real store actions
    wrapper = mount(TodoApp, {
      global: {
        plugins: [createTestingPinia({ stubActions: false })]
      }
    });

    // Get the Pinia store instance
    store = useTodosStore();

    // Wait for pending promises (e.g. the initial getTodos call) to settle before each test
    await flushPromises();
  });

  afterEach(() => {
    // Clear all mock calls after each test
    jest.clearAllMocks();
  });

  it('should render component without errors', () => {
    expect(wrapper.exists()).toBe(true);
  });

  it('should display Todo list', () => {
    const todoList = wrapper.findComponent({ name: 'TodoList' });
    expect(todoList.exists()).toBe(true);

    const items = wrapper.findAll('.item');
    expect(items.length).toBe(3);

    const completedTodos = items.filter((item) => item.attributes('completed') === 'true');
    expect(completedTodos.length).toBe(1);

    const incompleteTodos = items.filter((item) => item.attributes('completed') === 'false');
    expect(incompleteTodos.length).toBe(2);
  });

  // Test adding a new todo item
  it('should be able to add a new todo item', async () => {
    let items = wrapper.findAll('.item');
    expect(items.length).toBe(3);

    // Mock the addTodo function to resolve with the new todo item
    // (an imported binding cannot be reassigned, so configure the existing mock instead)
    (addTodo as jest.Mock).mockResolvedValue(newItem);

    const todoForm = wrapper.findComponent({ name: 'TodoForm' });

    await todoForm.find('[qa-id="title"]').setValue(newItem.title);
    await todoForm.find('[qa-id="description"]').setValue(newItem.description);
    await todoForm.find('[qa-id="add-button"]').trigger('submit');

    // Wait for promises to flush after adding the todo item
    await flushPromises();

    items = wrapper.findAll('.item');
    expect(items.length).toBe(4);
  });

  // Test toggling a todo item
  it('should be able to toggle a todo item', async () => {
    let items = wrapper.findAll('.item');
    expect(items.length).toBe(3);

    let completedTodos = items.filter((item) => item.attributes('completed') === 'true');
    expect(completedTodos.length).toBe(1);

    let incompleteTodos = items.filter((item) => item.attributes('completed') === 'false');
    expect(incompleteTodos.length).toBe(2);

    await items[0].find('[qa-id="toggle-button"]').trigger('click');

    // Wait for promises to flush after toggling the todo item
    await flushPromises();

    // Re-query the DOM so the assertions see the re-rendered list
    items = wrapper.findAll('.item');

    completedTodos = items.filter((item) => item.attributes('completed') === 'true');
    expect(completedTodos.length).toBe(2);

    incompleteTodos = items.filter((item) => item.attributes('completed') === 'false');
    expect(incompleteTodos.length).toBe(1);
  });

  it('should be able to edit a todo item', async () => {
    const firstTodoItem = wrapper.find('.item');
    const title = () => firstTodoItem.find('.todo-title').text();
    expect(title()).toBe(todoItems[0].title);

    await firstTodoItem.find('[qa-id="edit-button"]').trigger('click');
    await flushPromises();

    const newTitle = 'New title';
    await wrapper.find('[qa-id="edit-title"]').setValue(newTitle);
    await wrapper.find('[qa-id="edit-save-button"]').trigger('click');
    await flushPromises();
    expect(title()).toBe(newTitle);
  });

  it('should be able to delete a todo item', async () => {
    let items = wrapper.findAll('.item');
    expect(items.length).toBe(3);

    await items[0].find('[qa-id="delete-button"]').trigger('click');
    await flushPromises();

    items = wrapper.findAll('.item');
    expect(items.length).toBe(2);
  });
});
```

Stop 1: Theoretical Exercise of Test Pyramid

In parallel with the definition process, our QEs worked closely with developers to scrutinize every test case and classify it into the appropriate layer of our Test Pyramid. This exercise helped us identify the areas where we needed to focus our testing efforts.
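This post does not spell out the exact criteria used during the review. One way to record the outcome is sketched below. It assumes each test case is tagged with answers to two hypothetical yes/no questions (`needsRealBackend`, `spansMultipleComponents`) that loosely mirror the definitions given earlier. `targetLayer` and `groupByLayer` are illustrative names, not an existing tool.

```typescript
// Hypothetical model of the review exercise; field names are illustrative.
type Layer = "unit" | "integration" | "e2e";

interface TestCaseReview {
  id: string;
  // The behaviour can only be verified against the deployed app and a real backend.
  needsRealBackend: boolean;
  // The behaviour emerges from several components working together inside one MFE.
  spansMultipleComponents: boolean;
}

// Target layer for a reviewed test case: E2E only when nothing smaller can verify it.
function targetLayer(review: TestCaseReview): Layer {
  if (review.needsRealBackend) return "e2e";
  if (review.spansMultipleComponents) return "integration";
  return "unit";
}

// Group test case ids by the layer they should live in.
function groupByLayer(reviews: TestCaseReview[]): Record<Layer, string[]> {
  const groups: Record<Layer, string[]> = { unit: [], integration: [], e2e: [] };
  for (const review of reviews) {
    groups[targetLayer(review)].push(review.id);
  }
  return groups;
}
```

The ordering of the checks matters. A test case stays at the top of the pyramid only when a smaller scope cannot verify it.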

Stop 2: Prioritise test cases

Next, we prioritized our test cases by significance and impact. Our QEs carefully evaluated each test case, considering factors such as critical functionality, potential risks, and business requirements. Through this assessment, we categorized the test cases as Critical, High, Medium, or Low priority. This told us which test cases to migrate first, or to implement first if they did not exist yet.
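Turning those priority labels into a work order amounts to a stable sort. The minimal sketch below assumes each test case carries its priority and a hypothetical `exists` flag. The flag records whether the case only needs migrating or must be written from scratch. `buildPlan` and the other names are illustrative.

```typescript
type Priority = "Critical" | "High" | "Medium" | "Low";
type Action = "migrate" | "implement";

interface PrioritizedTestCase {
  id: string;
  priority: Priority;
  // true if the scenario already exists (e.g. as an E2E test) and only needs migrating
  exists: boolean;
}

interface PlanStep {
  id: string;
  action: Action;
}

// Lower rank = handled earlier.
const PRIORITY_RANK: Record<Priority, number> = { Critical: 0, High: 1, Medium: 2, Low: 3 };

// Order the backlog by priority. Array.prototype.sort is stable (ES2019+),
// so test cases with the same priority keep their original relative order.
function buildPlan(cases: PrioritizedTestCase[]): PlanStep[] {
  return [...cases]
    .sort((a, b) => PRIORITY_RANK[a.priority] - PRIORITY_RANK[b.priority])
    .map((c): PlanStep => ({ id: c.id, action: c.exists ? "migrate" : "implement" }));
}
```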

Stop 3: Training the team

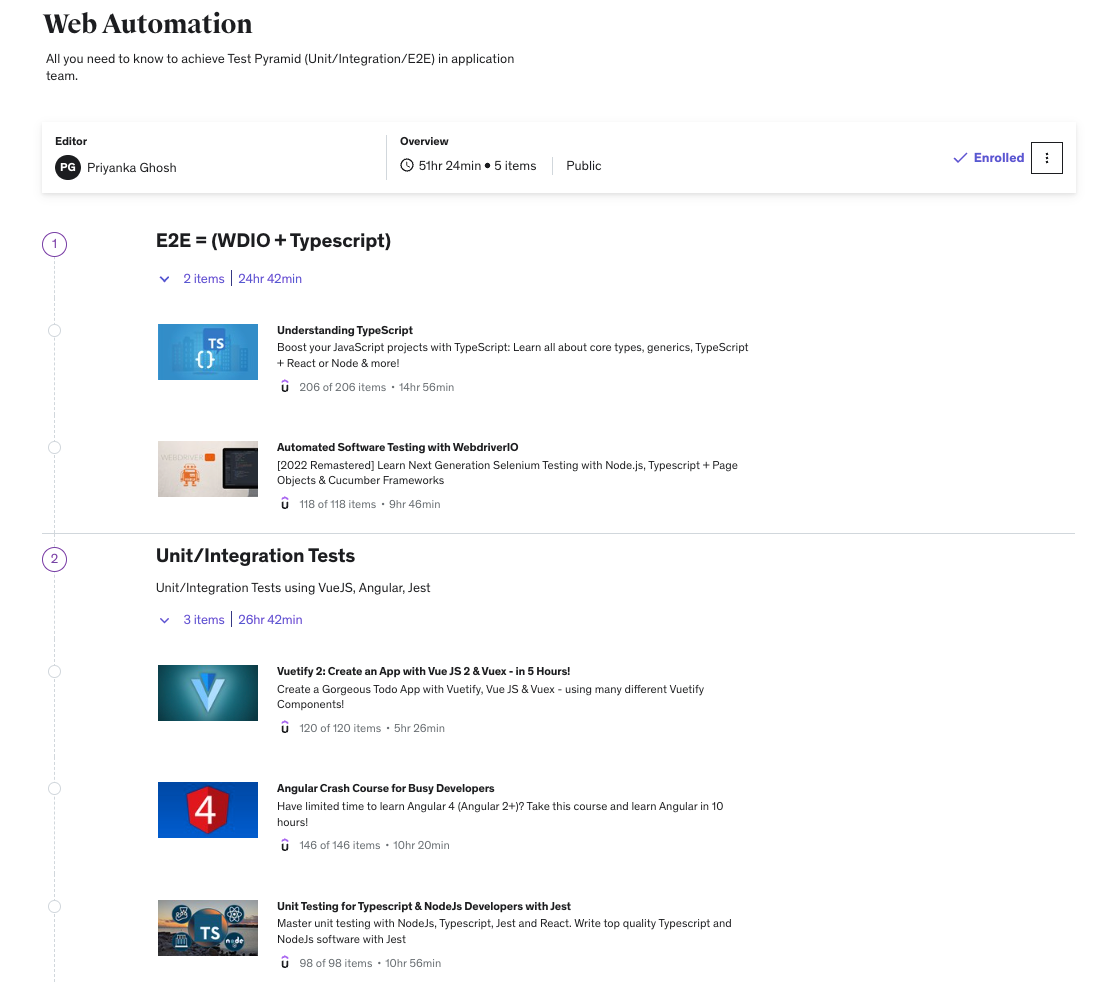

That was just the beginning. We recognized that our QEs needed a new skill set to write and execute integration tests effectively. QEs already have the skills to create E2E tests, so those tests are relatively straightforward for them. Integration tests, however, demand knowledge of web development frameworks (Vue, Angular, Svelte) and proficiency with tools like Jest for creating test doubles. To equip our QEs with these skills, we organized numerous hands-on training sessions. During these sessions, our QEs learned the intricacies of web development frameworks, including Angular and Vue, and how to use them to write clean and efficient code. They were also taught best practices for creating effective test doubles with Jest, enabling them to test their code more efficiently.

The training wasn’t limited to QEs alone. The Frontend core team provided invaluable support throughout the process, answering questions, providing guidance, and helping QEs troubleshoot any issues they encountered. Their expertise proved instrumental in helping our QEs quickly grasp these new skills.

Overall, the training sessions were a resounding success. Our QEs now possess the necessary skills to write and execute integration tests. We are excited to build upon the foundation we’ve established by constructing a learning path for QEs to train themselves on platforms like Udemy.

Stop 4: Further struggles

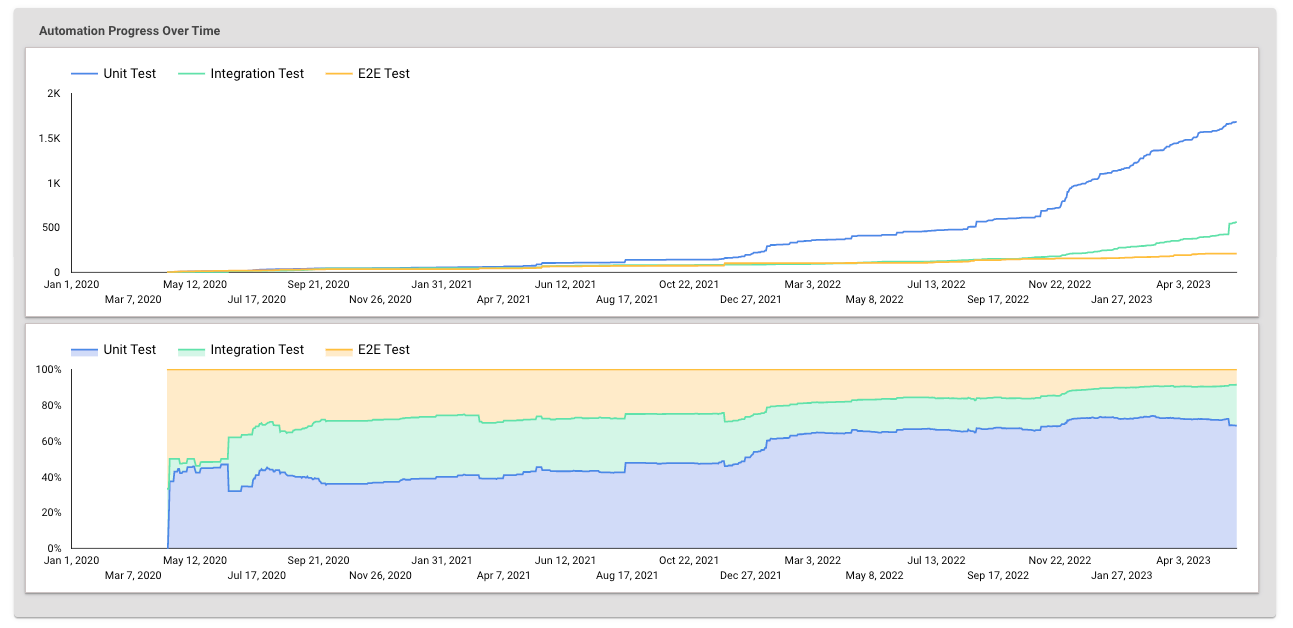

Our journey to recreate the Test Pyramid was not without its challenges. After a year of running the initiative, we eagerly awaited the results, hoping to see a significant increase in integration tests. Our developers and QEs had successfully migrated a considerable number of E2E tests to unit tests. The number of integration tests, however, fell short of our expectations.

On closer examination, we found that one of the major hurdles was creating mocks, or test doubles, for integration tests. Doing so required a deep understanding of the intricate application architecture and data flow. As a result, the work was slow and the resulting tests were less effective.

Destination

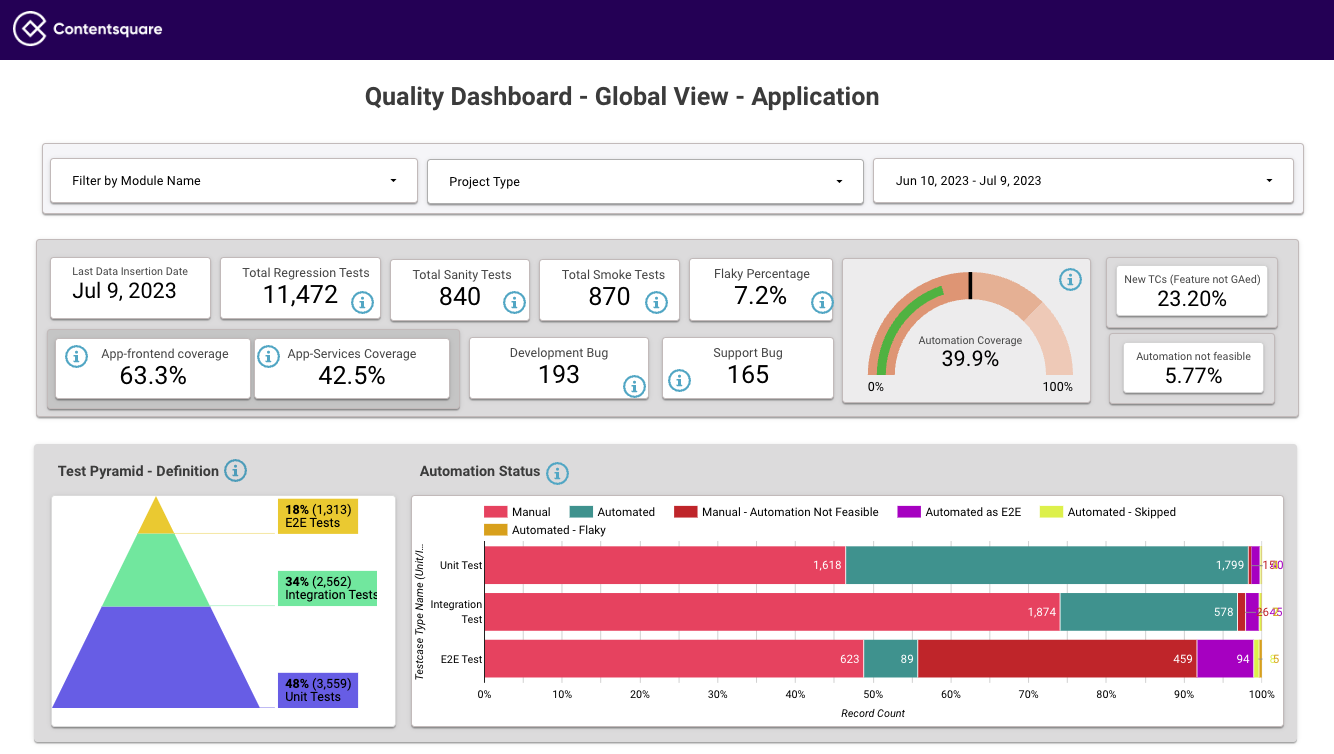

Realizing we needed a more streamlined approach, we looked for a solution that would not only address the mock-creation challenge but also give us comprehensive visibility into our testing efforts. That’s when our Quality Team introduced the Quality Dashboard, a tool designed to track and monitor the progress and outcomes of all quality-related activities, including our Test Pyramid.

Thanks to the Quality Dashboard, we can now visualize Contentsquare’s expected pyramid: 48% unit tests, 34% integration tests, and 18% E2E tests (based on the test cases classified in TestRail). Here is an update on our ongoing journey to reshape our Test Pyramid:

While the results were promising, we recognized that there was still room for improvement. To provide continuous support and guidance for the initiative, we set up a dedicated task force called the Quality Cockpit. Drawing on the lessons from the Quality Team’s earlier struggles, this team proactively tackled the inefficiencies that had slowed our progress. Building on a successful proof of concept by the Frontend core team, we assigned one or two developers from each Product Unit (Scrum team) to create an integration toolbox: a collection of ready-to-use mocks for all dependencies of the square’s container component.
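This post does not show the toolbox itself. Below is a minimal, framework-free sketch of the idea. It assumes one factory per dependency, and `createTodoServiceStub` and `sampleTodos` are hypothetical names modelled on the todo examples earlier in this post. Each factory returns a ready-to-use stub with shared fixture data and starts from fresh state on every call. A test can override only the methods its scenario cares about.

```typescript
// Hypothetical dependency and data shapes, modelled on the todo examples in this post.
interface Todo {
  id: number;
  title: string;
  description: string;
  completed: boolean;
}

interface TodoService {
  getTodos(): Todo[];
  updateTodo(todo: Todo): Todo[];
}

// Shared fixture data, defined once in the toolbox instead of in every spec file.
const sampleTodos: readonly Todo[] = [
  { id: 0, title: "Grocery shopping", description: "1. A pack of carrots\n2. Eggs", completed: false },
  { id: 1, title: "Integration Test", description: "10 tests", completed: false },
  { id: 2, title: "Pick up kid from school", description: "", completed: true },
];

// Factory for a stateful in-memory stub. Each call starts from a fresh copy of the
// fixtures, so one test cannot leak state into another; any method can be overridden.
function createTodoServiceStub(overrides: Partial<TodoService> = {}): TodoService {
  let todos: Todo[] = sampleTodos.map((t) => ({ ...t }));
  const copy = (): Todo[] => todos.map((t) => ({ ...t }));
  const base: TodoService = {
    getTodos: () => copy(),
    updateTodo: (todo) => {
      todos = todos.map((t) => (t.id === todo.id ? { ...todo } : t));
      return copy();
    },
  };
  return { ...base, ...overrides };
}
```

In a Jest suite, each method could also be wrapped in `jest.fn(...)`, so that tests can assert on calls as well as on results.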

Equipped with the integration toolbox, our QEs no longer have to create mocks from scratch. They can focus on writing and executing tests and on ensuring the stability and reliability of our modules.

Hard work truly pays off. Now our quality engineers, like the Aztecs, Mayans, and ancient Egyptians, all agree on the shape of the Pyramid!
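The shape itself can be checked mechanically. The minimal sketch below assumes per-layer counts of classified test cases are available, for example exported from TestRail. `LayerCounts`, `shares`, and `isPyramid` are hypothetical names and are not part of the Quality Dashboard.

```typescript
// Hypothetical per-layer counts of classified test cases.
interface LayerCounts {
  unit: number;
  integration: number;
  e2e: number;
}

// Share of each layer as a whole-number percentage.
// Because of rounding, the three values may not add up to exactly 100.
function shares(c: LayerCounts): LayerCounts {
  const total = c.unit + c.integration + c.e2e;
  if (total === 0) return { unit: 0, integration: 0, e2e: 0 };
  const pct = (n: number): number => Math.round((n / total) * 100);
  return { unit: pct(c.unit), integration: pct(c.integration), e2e: pct(c.e2e) };
}

// Pyramid-shaped: every layer is at least as large as the layer above it.
function isPyramid(c: LayerCounts): boolean {
  return c.unit >= c.integration && c.integration >= c.e2e;
}
```

With the distribution reported above (48% unit, 34% integration, 18% E2E), `isPyramid` returns `true`. An E2E-heavy suite like the one we started from returns `false`.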

Last words

The journey to reshape our Test Pyramid has not only been about implementing technical changes but also about gaining valuable insights and learnings along the way. We discovered that a collaborative and iterative approach is crucial for success. If we were to start over again, we would emphasize the importance of involving all stakeholders from the early stages, including developers, QEs, and product owners, to ensure a shared understanding of testing goals and priorities.

While the Test Pyramid provides a helpful framework for organizing and prioritizing tests, we learned that it is not a one-size-fits-all solution. Each project and organization may have unique considerations and trade-offs. It’s important to adapt and tailor the pyramid model to our specific needs, striking a balance between different types of tests based on the nature of the software, project constraints, and available resources.

The Quality Team’s higher-order goal goes beyond meeting business needs. We aim to establish a culture of quality and continuous improvement, where testing is an integral part of the development process. We strive to empower developers and QEs with the tools, knowledge, and support they need to deliver high-quality software that not only meets business requirements but also delights our users.

As our journey continues, we remain dedicated to refining our testing practices, embracing new technologies and methodologies, and staying adaptable in an ever-evolving landscape. Together, we aim to achieve a resilient and effective testing ecosystem that fosters innovation, minimizes risks, and ultimately delivers exceptional software experiences.

Stay tuned for more updates!

Bonus

Want to know how we implemented our integration tests? We have shared a repository with example code on GitHub.