Full-Text Search with Quickwit and ClickHouse in a Cluster-to-Cluster Context

ClickHouse recently published a guide on how to use the full-text search engine Quickwit in a machine-to-machine configuration. In this blog post, we’ll see how we handle a cluster-to-cluster configuration at Contentsquare.

Introduction

ClickHouse is a column-oriented database management system (DBMS) for online analytical processing (OLAP) of queries. Quickwit is a promising full-text search engine. Initially designed for log management, it can be used for much more than that.

Recently, ClickHouse added a developer guide to their official documentation on how to use full-text search with ClickHouse and Quickwit. It shows how to use Quickwit with ClickHouse in a simple machine-to-machine context.

However, since both tools can run in cluster mode, you might be wondering, “OK, but how can I adapt this solution to my cluster, and what kind of problems am I going to have?” And you are right: moving from a machine-to-machine setup to a cluster-to-cluster setup can introduce new complexity and new issues. This post will show you how to address them.

Simple Cluster-to-Cluster Setup

This section presents how a query is processed when a ClickHouse cluster works with a Quickwit cluster. In this setup we have, on one side, N ClickHouse shards with Distributed tables. The Distributed engine allows distributed query processing on multiple servers, and thus parallelizes reads. On the other side, we have M Quickwit nodes.

When the ClickHouse cluster receives a query requesting data from a distributed table, the query, which calls Quickwit through the url function, is distributed among all shards. In turn, each participating shard sends the same request to Quickwit, and each shard receives the same answer from Quickwit. Since Quickwit is not natively aware of the sharding key of the distributed ClickHouse table, each participating shard receives N times as much data as it needs.

Example of a query “Q”:

    SELECT count(*)
    FROM events
    WHERE event_id IN (
        SELECT id AS event_id
        FROM url('http://quickwit-deployment/api/v1/gh-archive/search/stream?query=ClickHouse&fastField=id&outputFormat=ClickHouseRowBinary', RowBinary, 'id UInt64')
    )

In this example, given a distributed table events, the query counts the number of events that contain the word ClickHouse. Each shard participating in the ClickHouse cluster makes a GET request to the Quickwit cluster, so a cluster of N shards sends N queries to Quickwit. In addition, if the sharding key of the events table is event_id, each ClickHouse shard receives many event_ids that belong to other shards and are useless to it.

As the previous example highlights, a naive cluster-to-cluster setup between Quickwit and ClickHouse can introduce two sources of read amplification. The first is the consequence of every ClickHouse shard sending the same query: Quickwit receives and runs the same query N times and returns the same answer each time. That is N-1 more queries than needed. It also means that, for each request, a fraction (N-1)/N of the data Quickwit searches and reads is irrelevant to the requesting shard. The second comes from the ClickHouse sharding: each shard receives the full answer of size K instead of only the K/N subpart it needs (assuming the sharding key distributes data uniformly), so each shard receives N times the data it needs. Both issues waste a lot of network bandwidth and computation.
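To make the two amplifications concrete, here is a small arithmetic sketch. The function name and the figures (4 shards, one million matching ids) are illustrative assumptions, not measurements from our clusters.

```python
from fractions import Fraction


def read_amplification(n_shards: int, k_ids: int) -> dict:
    """Quantify both amplifications for N shards and an answer of K ids.

    Assumes a uniform sharding key, as in the text above.
    """
    return {
        # Amplification 1: Quickwit runs the same query once per shard.
        "queries_sent": n_shards,
        "redundant_queries": n_shards - 1,
        # Amplification 2: every shard downloads the full answer.
        "ids_received_per_shard": k_ids,
        "ids_needed_per_shard": Fraction(k_ids, n_shards),
        "irrelevant_fraction": Fraction(n_shards - 1, n_shards),
        "total_ids_transferred": n_shards * k_ids,
    }


stats = read_amplification(n_shards=4, k_ids=1_000_000)
print(stats["redundant_queries"])            # 3
print(float(stats["irrelevant_fraction"]))   # 0.75
print(stats["total_ids_transferred"])        # 4000000
```

With 4 shards, three of the four queries are redundant and three quarters of what each shard downloads is thrown away.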

With Great Cluster Comes Great Difficulties

As we saw in the previous section, moving to a cluster-to-cluster setup raises two main read amplification problems.

- Read amplification 1: Quickwit receives (almost) the same query from each of the N ClickHouse shards, so it searches the same data N times.

- Read amplification 2: Each ClickHouse shard receives the full answer instead of only its own subpart.

Several configurations can be used to work in a cluster-to-cluster mode. Each has its pros and cons.

Setup 1: naive configuration - Quickwit is not aware of the data sharding in ClickHouse. This is the setup we just saw with the two read amplification issues.

Setup 2: Quickwit is aware of the data sharding in ClickHouse, with one Quickwit searcher per ClickHouse shard. Each searcher can then return only the subpart of the answer expected by its corresponding shard. This resolves a read amplification issue, but adding a new shard becomes harder because data has to be rebalanced on both ClickHouse and Quickwit.

Setup 3: Introduce a “bridge” in the middle of the pipeline. A bridge solves the read amplification issues while decoupling the data sharding of the two systems, so the sizing of the search infrastructure becomes independent of the ClickHouse cluster topology.

The Bridge Solution

The main purpose of the bridge is to reduce the read amplification caused by the topology of ClickHouse (the fact that we have multiple shards). It also caches responses from Quickwit in memory to speed up subsequent queries. In this article, we chose an LRU cache policy for simplicity.
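As an illustration of the LRU policy mentioned above, here is a minimal in-memory sketch. The class name, the use of the raw query string as the key, and the capacity counted in entries are assumptions made for the example, not a description of our production bridge.

```python
from collections import OrderedDict
from typing import Optional


class LRUCache:
    """Tiny LRU cache mapping a query string to a raw Quickwit answer."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._entries: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, query: str) -> Optional[bytes]:
        if query not in self._entries:
            return None
        # A hit makes the entry the most recently used one.
        self._entries.move_to_end(query)
        return self._entries[query]

    def put(self, query: str, answer: bytes) -> None:
        self._entries[query] = answer
        self._entries.move_to_end(query)
        if len(self._entries) > self._capacity:
            # Evict the least recently used entry (the oldest in the order).
            self._entries.popitem(last=False)


cache = LRUCache(capacity=2)
cache.put("ClickHouse", b"answer-1")
cache.put("Quickwit", b"answer-2")
cache.get("ClickHouse")            # touch: "Quickwit" is now the oldest
cache.put("bridge", b"answer-3")   # evicts "Quickwit"
print(cache.get("Quickwit"))       # None
print(cache.get("ClickHouse"))     # b'answer-1'
```

A real bridge would more likely bound the cache by bytes than by number of entries, since answers can vary widely in size.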

The bridge is agnostic to the query and the answer. All it has to know is which shard is asking and whether the answer is in the cache, even partially. The bridge is also aware of the sharding key of the ClickHouse cluster and uses this information to filter out the data that is irrelevant to the calling shard.
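The filtering step can be sketched as follows. This assumes that the answer is a RowBinary stream of UInt64 ids (8 bytes each, little-endian), as in the example query, that the caller tells the bridge its shard index, and that an id belongs to shard `id % N`. The real mapping depends on the sharding expression and shard weights of your Distributed table, so treat the modulo rule and the function name as hypothetical.

```python
import struct


def filter_for_shard(payload: bytes, shard_index: int, n_shards: int) -> bytes:
    """Keep only the UInt64 ids that belong to the calling shard.

    Assumption: an id lives on shard (id % n_shards).
    """
    kept = bytearray()
    # RowBinary encodes each UInt64 as 8 little-endian bytes.
    for (event_id,) in struct.iter_unpack("<Q", payload):
        if event_id % n_shards == shard_index:
            kept += struct.pack("<Q", event_id)
    return bytes(kept)


# Six ids as Quickwit would stream them, then the part shard 1 of 3 needs.
payload = struct.pack("<6Q", 10, 11, 12, 13, 14, 15)
shard_1 = filter_for_shard(payload, shard_index=1, n_shards=3)
print(struct.unpack("<2Q", shard_1))  # (10, 13)
```

The bridge never interprets the search query itself; it only needs to decode the column used as the sharding key.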

Data flow pipeline: different use cases with the bridge

In this section, we present the bridge behavior in three different scenarios to highlight its advantage in the face of read amplification issues.

Scenario 1: The unknown query (Have you met the query?)

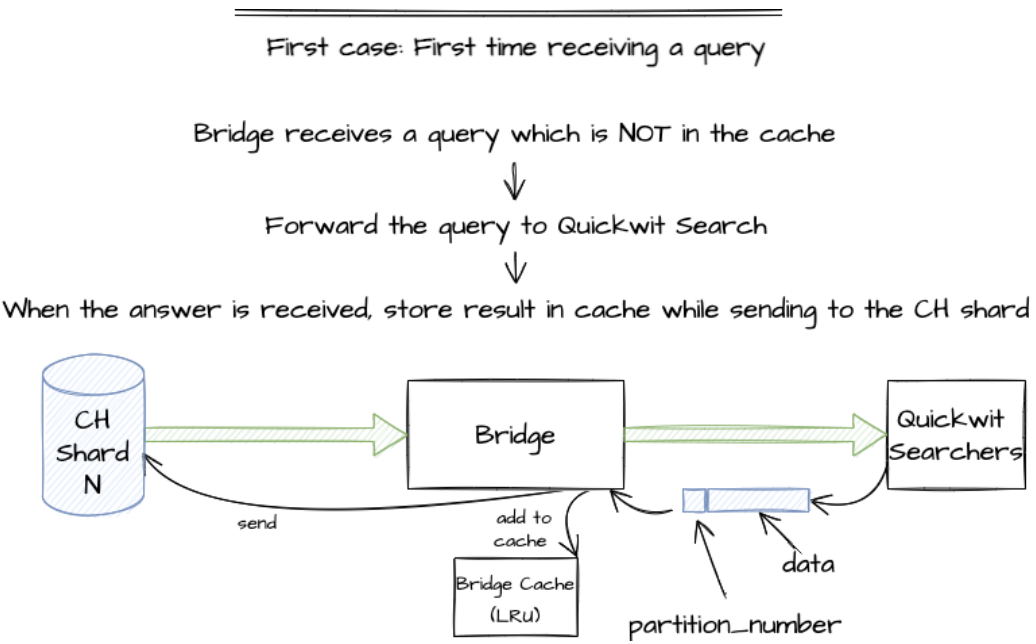

ClickHouse shard N sends a query that doesn’t exist in the cache yet. The bridge starts by reserving an empty slot in the cache, which blocks any later request for the identical query, and then forwards the query to the Quickwit searchers. The answer is copied into the reserved cache slot and streamed to the ClickHouse shard at the same time. Schema 1 illustrates this data flow and how the bridge works in this scenario.
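The "copy into the cache while streaming to the shard" step can be sketched with a generator. `CacheSlot`, `stream_and_cache` and the plain dict used as a cache are hypothetical names for this example, and the Quickwit response is replaced by an in-memory iterator of chunks.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Iterator


@dataclass
class CacheSlot:
    data: bytearray = field(default_factory=bytearray)
    complete: bool = False  # False while the answer is still being fetched


def stream_and_cache(
    cache: Dict[str, CacheSlot], query: str, upstream: Iterable[bytes]
) -> Iterator[bytes]:
    """Reserve a slot, then relay chunks to the caller while caching them."""
    slot = CacheSlot()
    cache[query] = slot  # reserved immediately, before any chunk is read

    def relay() -> Iterator[bytes]:
        for chunk in upstream:
            slot.data += chunk   # copy into the cache...
            yield chunk          # ...and stream to the shard at the same time
        slot.complete = True

    return relay()


cache: Dict[str, CacheSlot] = {}
fake_quickwit = iter([b"\x01\x02", b"\x03"])  # stand-in for the HTTP stream
sent = b"".join(stream_and_cache(cache, "ClickHouse", fake_quickwit))
print(sent == bytes(cache["ClickHouse"].data), cache["ClickHouse"].complete)
# True True
```

The `complete` flag is what lets a later request tell a reserved, still-filling slot apart from a finished answer.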

Scenario 2: The repeat (The return of the query)

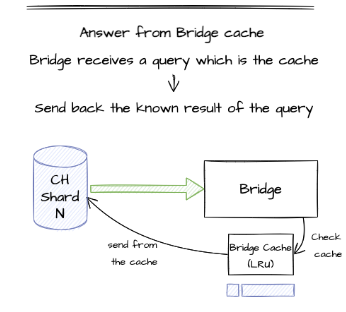

After scenario 1, a (different) ClickHouse shard sends the same query to the bridge again. Quickwit is not queried, and the answer is returned directly from the cache.

Scenario 3: Queries concurrency (Queries are coming)

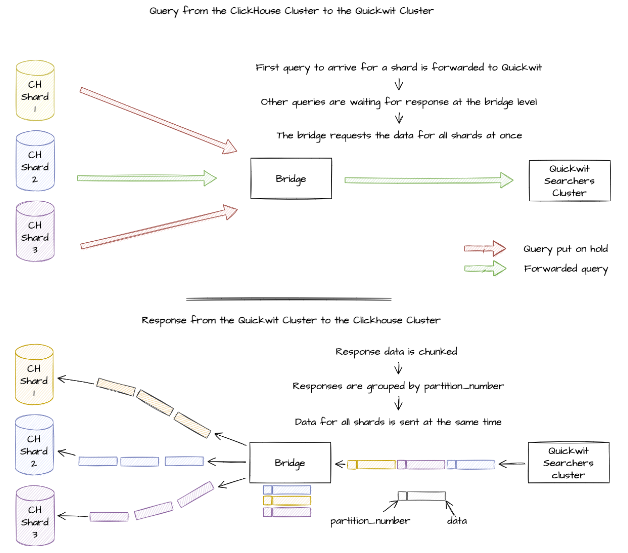

Here, several shards send the same query at the same time. Remember, each shard only wants a part of the answer, while the Quickwit searchers return the full answer. This is represented in the upper part of Schema 3: several shards send the same request to the bridge.

When one shard sends a query, the bridge blocks all other requests for the same query while it forwards the first one to Quickwit. This avoids flooding the Quickwit cluster with the same query. Once the answer comes back, the data is sent to all shards at the same time. In addition, instead of sending everyone the full answer, which each shard would then have to deserialize and filter, the bridge splits the answer, since it knows which shard is requesting which subpart. This is represented in the bottom part of Schema 3.
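The blocking behaviour described above resembles what is often called request coalescing. Below is a minimal threaded sketch under several assumptions: the `Bridge` class and its methods are hypothetical, the answer is modelled as a list of integer ids, shard ownership is `id % N`, and error handling and cache eviction are left out.

```python
import threading
from typing import Callable, Dict, List


class Bridge:
    def __init__(self, fetch: Callable[[str], List[int]], n_shards: int) -> None:
        self._fetch = fetch              # stand-in for the call to Quickwit
        self._n_shards = n_shards
        self._lock = threading.Lock()
        self._done: Dict[str, threading.Event] = {}
        self._answers: Dict[str, List[int]] = {}

    def query(self, query: str, shard_index: int) -> List[int]:
        with self._lock:
            event = self._done.get(query)
            is_first = event is None
            if is_first:
                # Reserve the slot so identical queries wait instead of fetching.
                event = threading.Event()
                self._done[query] = event
        if is_first:
            try:
                self._answers[query] = self._fetch(query)
            finally:
                event.set()              # release every waiting shard
        else:
            event.wait()
        # Split the answer: each shard only gets the ids it owns (assumed rule).
        return [i for i in self._answers[query] if i % self._n_shards == shard_index]


calls: List[str] = []


def fake_quickwit(query: str) -> List[int]:
    calls.append(query)
    return list(range(8))


bridge = Bridge(fake_quickwit, n_shards=4)
results: Dict[int, List[int]] = {}


def shard_worker(idx: int) -> None:
    results[idx] = bridge.query("ClickHouse", idx)


threads = [threading.Thread(target=shard_worker, args=(i,)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(calls), results[1])  # 1 [1, 5]
```

Four shards ask the same question, Quickwit is called once, and each shard receives only its own quarter of the ids.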

These three scenarios show that a bridge with a cache removes the read amplification problems that appear when moving from a machine-to-machine setup to a cluster-to-cluster setup. ClickHouse shards no longer need to deserialize and filter the full answer, and Quickwit searchers no longer waste time returning the same answer again and again.

Conclusion

We presented a way to remove read amplification issues when moving from machine-to-machine communication to cluster-to-cluster communication. These read amplifications waste a lot of network bandwidth, but adding a “bridge” machine or cluster that manages requests and answers removes them. The bridge does not even need to understand the request or the answer, so this solution can be adapted to other cluster-to-cluster configurations that face read amplification issues.

The first amplification, caused by every shard querying Quickwit, is removed by letting the bridge absorb all requests from the ClickHouse shards and send only one request to Quickwit. The second, caused by sharding, is solved by letting the bridge dispatch to each shard only its part of the answer. In addition, a cache limits the number of requests sent to Quickwit and the bandwidth used. You now have a better idea of how to get the best of ClickHouse and Quickwit without these drawbacks. Better, Faster, Stronger Together.