Full page screenshots on the server side

Full web page screenshots are frequently used in Contentsquare’s Zoning module to share analyses with key collaborators as PDFs or PNGs. Implementing this feature was challenging and required multiple iterations that we will detail below.

Several open-source solutions already exist:

- Puppeteer using the page.screenshot() API

- Playwright using a similar page.screenshot() API

- Pico: A client-side JavaScript package

- full-page-screen-capture-chrome-extension: A simple Google Chrome extension

Our solution draws inspiration from these projects, but none could be used out of the box: we needed to handle edge cases such as fixed elements on the page, very long pages, and pages that contain scrollable elements.

Client-side vs. Server-side

We chose a server-side solution, which provides many advantages:

- No client-side processing: all the processing is done on the server side, so we do not rely on the performance of the user's browser.

- Better user experience: generating a good web page screenshot may require modifying the content of the page and scrolling while processing. In a client-side solution, this may open a new window and block the user from doing anything else until the screenshot is generated. With a server-side solution and an asynchronous request, users can keep navigating or request new screenshots while the processing runs on the server.

- Server-side browser pool: the client can send multiple screenshot requests at the same time, which we can manage in parallel on the server side with a pool of browser instances.

- Long processing: some screenshots take a while to generate, so the client has to manage long-running tasks. This can be done with real-time communication that notifies the client once the task is done, or with a database polling mechanism that reports the status of the task so the client can download the screenshot once it is generated.

Our Puppeteer-based solution

Puppeteer is a Node.js library which provides a high-level API to control Chrome or Chromium with the DevTools Protocol.

We based our solution on Puppeteer because it produced perfect results on different edge cases from our client sites. To render a client site, we use a static web application that is capable of creating zones on the page and overlaying metrics on the page elements.

Our current solution went through multiple iterations:

Version 1: Puppeteer full web page API

Here is how to use the basic Puppeteer API to take a full web page screenshot:

    // Runs inside an async function.
    const url = "https://www.contentsquare.com/";
    const browser = await puppeteer.launch({ headless: true });
    const page = await browser.newPage();
    await page.goto(url, { waitUntil: "networkidle0", timeout: 60000 });
    return page.screenshot({ fullPage: true });

We quickly faced some limitations with this method:

- Long web pages: pages over 16k pixels in height are not captured correctly. Because of a browser limitation, the output contains duplicated content.

- Lazy loading content: some pages need to be fully scrolled through until all the page content and images are loaded.

Version 2: Scroll and screenshot

To resolve the issues of long web pages and lazy loading content, we implemented a “scroll and screenshot” mechanism. The algorithm relies on these steps to generate a full web page screenshot:

- Scroll until all the content is loaded and we are able to get an accurate page height.

      async function getIframeComputedHeight(frame: ElementHandle): Promise<number> {
        return frame.evaluate(async () => {
          return new Promise((resolve, reject) => {
            let totalHeight = 0;
            const distance = 500;
            const startTime = new Date().getTime();
            // Scroll down by 500px every 500ms until the bottom is reached.
            const timer = setInterval(() => {
              const scrollHeight = document.body.scrollHeight;
              if (scrollHeight > 0) {
                window.scrollBy(0, distance);
                totalHeight += distance;
                if (totalHeight >= scrollHeight) {
                  clearInterval(timer);
                  window.scrollTo(0, 0);
                  resolve(scrollHeight);
                }
              }
              // Give up after 60 seconds.
              if (new Date().getTime() - startTime > 60000) {
                clearInterval(timer);
                reject(`Scroll timeout in iframe`);
              }
            }, 500);
          });
        }) as Promise<number>;
      }

- Using this calculated height, find out how many screenshots we need to take (10 maximum).

      const scrollHeight = Math.min(
        computedDocumentHeight,
        Math.max(VIEWPORT_OPTIONS.height, Math.round(computedDocumentHeight / 10)),
      );

- Set the browser viewport height to the screenshot height.

      await page.setViewport({ height: scrollHeight, width: viewport.width });

- Iterate through the screenshots. On each iteration, scroll to a new vertical position, starting at position 0, in scrollHeight increments.

      let yPos = 0;
      let screenshots = [];
      let lastIteration = false;
      while (!lastIteration) {
        // The page is scrolled to the next position before each capture (not shown).
        lastIteration = yPos + scrollHeight >= computedDocumentHeight;
        const screenshot = await page.screenshot();
        screenshots.push({
          input: await sharp(screenshot).toBuffer(),
          left: 0,
          // The last screenshot is aligned with the bottom of the page.
          top: yPos === 0 ? 0 : lastIteration ? computedDocumentHeight - scrollHeight : yPos,
        });
        yPos += scrollHeight;
      }

- Merge the generated screenshots using the Sharp library.

      return sharp({
        create: {
          width: viewport.width,
          height: computedDocumentHeight,
          channels: 3,
          background: { r: 255, g: 255, b: 255 },
        },
      }).composite(screenshots);
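To make the slicing arithmetic concrete, here is a minimal sketch that reproduces the height and offset calculations from the steps above as a pure function, without a browser. The names `planSlices`, `SlicePlan` and `maxSlices` are hypothetical and only serve the illustration.

```typescript
// Hypothetical helper: mirrors the scrollHeight and `top` computations above.
interface SlicePlan {
  sliceHeight: number; // height of each screenshot (and of the viewport)
  tops: number[];      // vertical offset of each screenshot in the merged image
}

function planSlices(
  documentHeight: number,
  viewportHeight: number,
  maxSlices = 10, // assumption: the divisor used in the text
): SlicePlan {
  const sliceHeight = Math.min(
    documentHeight,
    Math.max(viewportHeight, Math.round(documentHeight / maxSlices)),
  );
  const tops: number[] = [];
  let yPos = 0;
  let lastIteration = false;
  while (!lastIteration) {
    lastIteration = yPos + sliceHeight >= documentHeight;
    // The last slice is aligned with the bottom of the page, so it may
    // overlap the previous one instead of running past the document.
    tops.push(lastIteration ? documentHeight - sliceHeight : yPos);
    yPos += sliceHeight;
  }
  return { sliceHeight, tops };
}
```

For a 3000px page and a 1080px viewport, this gives three slices at offsets 0, 1080 and 1920: the last slice overlaps the second one by 240px rather than capturing past the end of the page.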

Version 3: Scroll and screenshot + header and footer cropping

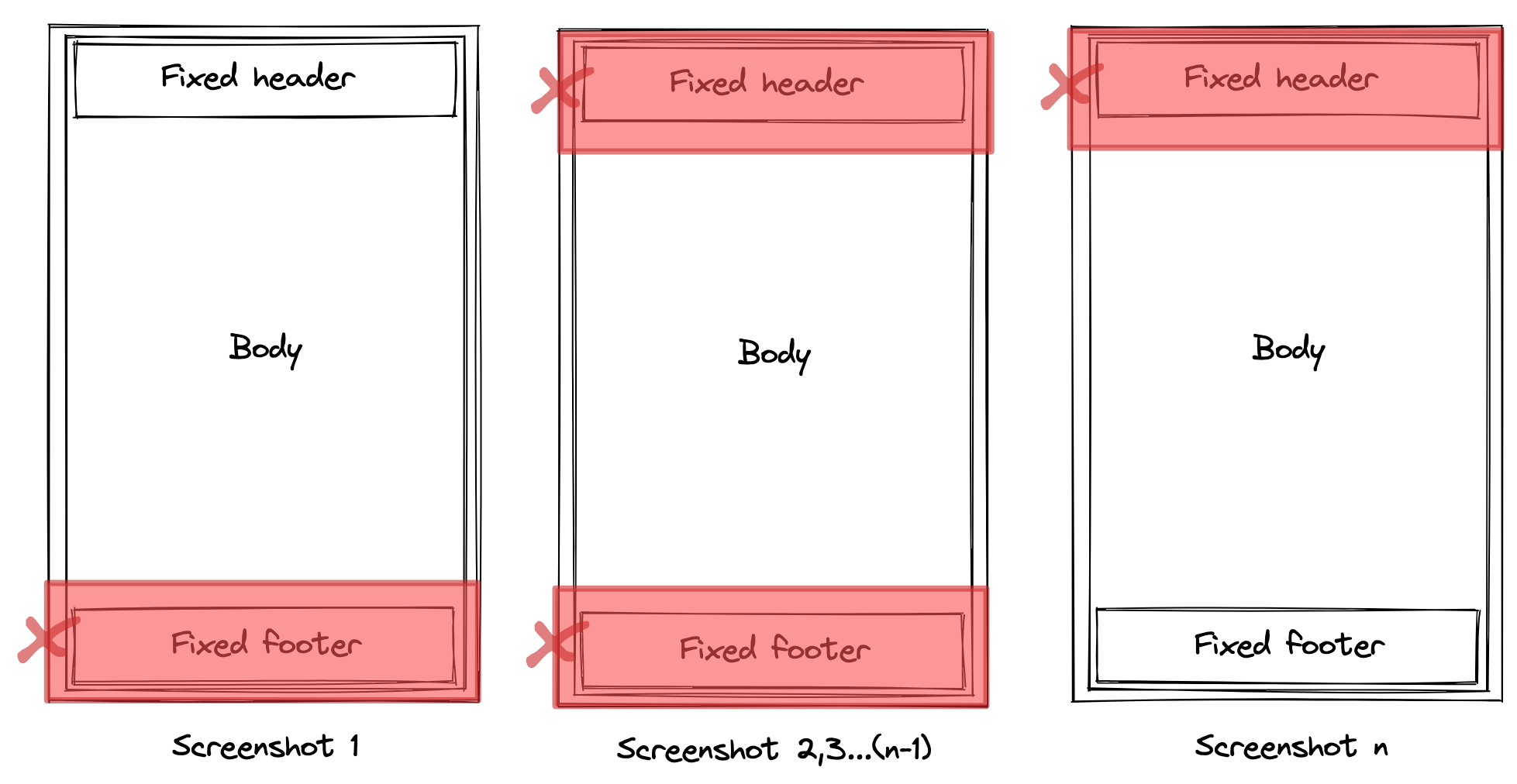

Using the scroll and screenshot mechanism, we noticed that fixed elements were appearing on every screenshot taken, which resulted in an image containing duplicated content.

To resolve this, we added custom logic that crops the header and footer from the screenshots generated on all iterations except the first and last one, and then offsets the next scrollHeight accordingly. We chose 250px as the maximum header and footer heights.
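As a rough illustration of what this cropping costs, the sketch below estimates how many screenshots a page needs with and without cropping. It assumes that the first and last screenshots are kept whole and that every other screenshot always loses the full 250px at the top and at the bottom; the function name and this simplification are ours, not a description of the production code.

```typescript
const MAX_BAND_HEIGHT = 250; // maximum header and footer height from the text

// Hypothetical estimate of the number of screenshots for a given page.
function estimateScreenshotCount(
  documentHeight: number,
  sliceHeight: number,
  cropHeaderAndFooter: boolean,
): number {
  if (documentHeight <= sliceHeight) return 1;
  if (!cropHeaderAndFooter) return Math.ceil(documentHeight / sliceHeight);

  // Assumption: middle screenshots only contribute what is left after cropping.
  const usableHeight = sliceHeight - 2 * MAX_BAND_HEIGHT;
  if (usableHeight <= 0) {
    throw new RangeError("sliceHeight must exceed twice the cropped band height");
  }
  // First and last screenshots are kept whole; the rest covers the middle.
  const middleHeight = Math.max(0, documentHeight - 2 * sliceHeight);
  return 2 + Math.ceil(middleHeight / usableHeight);
}
```

Under these assumptions, a 6000px page captured in 1080px slices goes from 6 screenshots to 9, since each middle screenshot only contributes 580px of content.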

Version 4 (final): Scroll and screenshot + fixed elements hiding

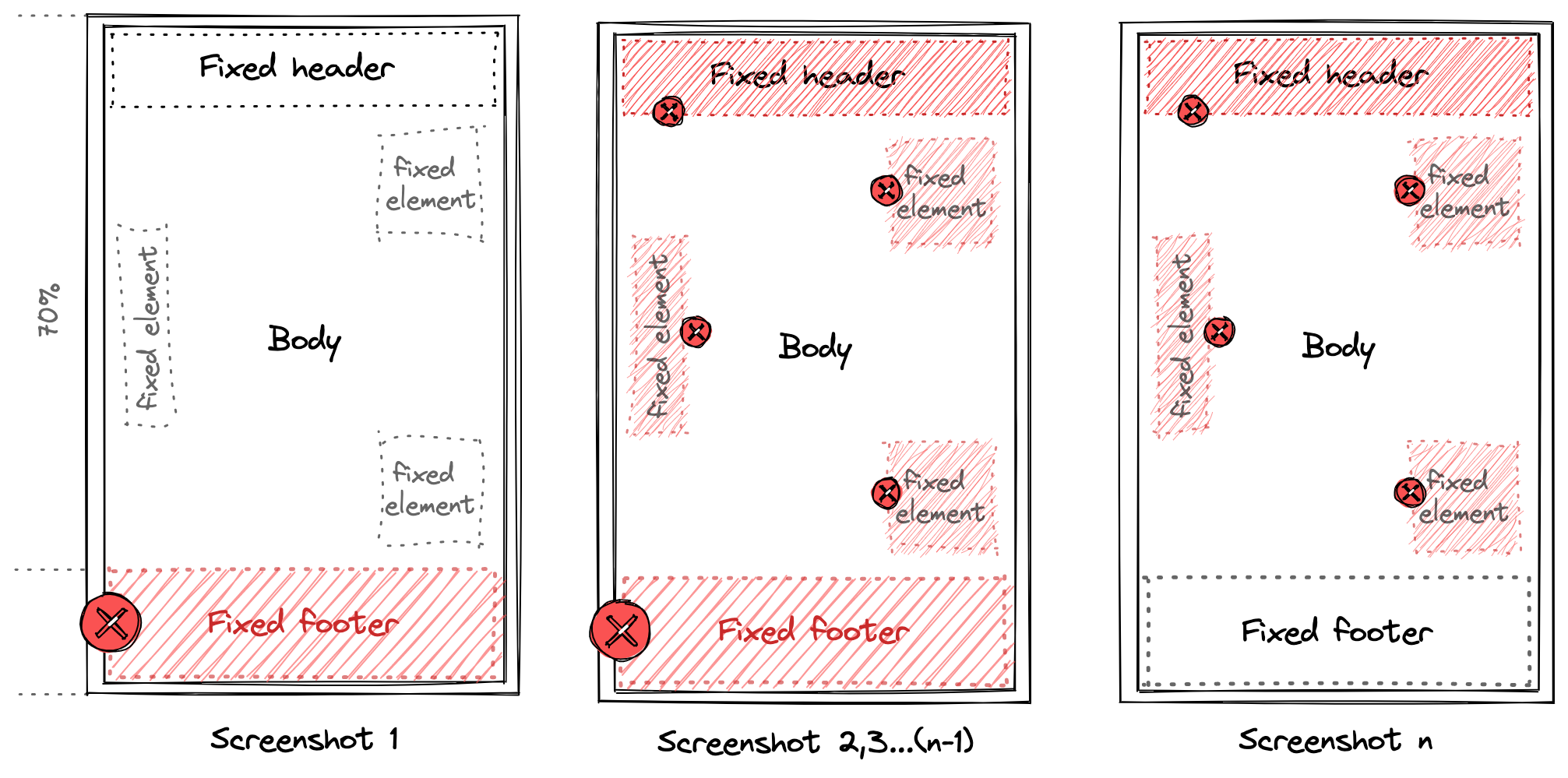

We noticed that version 3 was very resource-intensive and much slower as we needed more processing to crop each screenshot, in addition to having more iterations than the initial solution. We would also often find fixed elements that were not included in the header or footer.

To resolve this issue, we decided to selectively hide fixed elements thanks to the ability to edit the DOM of a page using page.evaluate(). The algorithm is as follows:

- Before starting the scroll and screenshot mechanism, identify the fixed elements on the page, considering that footer elements have a top position greater than 70% of the viewport height.

      async function getFixedElements(frame: ElementHandle): Promise<FixedElement[]> {
        return frame.evaluate(() => {
          const fixedElements: FixedElement[] = [];
          const allElements = document.querySelectorAll('*');
          for (let i = 0; i < allElements.length; i++) {
            const elementStyle = getComputedStyle(allElements[i]);
            if (elementStyle.position === 'fixed' || elementStyle.position === 'sticky') {
              const { top } = allElements[i].getBoundingClientRect();
              // Remember the element by its index so it can be found again later.
              fixedElements.push({
                elementIndex: i,
                defaultDisplay: elementStyle.display,
                topPosition: top,
              });
            }
          }
          return fixedElements;
        });
      }

      const fixedElements = await getFixedElements(frame);
      const footerFixedElements = fixedElements.filter(
        element => element.topPosition >= viewport.height * 0.7,
      );

- On the first iteration, hide the footer elements before taking the screenshot.

      async function toggleFixedElementsDisplay(
        frame: ElementHandle,
        fixedElements: FixedElement[],
        showFixedElements: boolean,
      ): Promise<void> {
        return frame.evaluate(
          (_, { fixedElements, showFixedElements }) => {
            const allElements = document.querySelectorAll('*');
            fixedElements.forEach((fixedElement: FixedElement) => {
              // Restore the original display value, or force the element to be hidden.
              (allElements[fixedElement.elementIndex] as HTMLElement).setAttribute(
                'style',
                `display: ${showFixedElements ? fixedElement.defaultDisplay : 'none !important'}`,
              );
            });
            return;
          },
          { fixedElements, showFixedElements },
        );
      }

      await toggleFixedElementsDisplay(frame, footerFixedElements, false);

- On the second iteration, hide all the fixed elements on the page.

      await toggleFixedElementsDisplay(frame, fixedElements, false);

- On the last iteration, show only the footer fixed elements before taking the screenshot.

      await toggleFixedElementsDisplay(frame, footerFixedElements, true);
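The schedule described by these steps can be summed up as a small decision function. The sketch below is only an illustration: the `Toggle` type and both function names are hypothetical, the `FixedElement` shape is inferred from the fields used above, and the handling of a page that fits in a single screenshot (nothing is hidden) is our assumption.

```typescript
interface FixedElement {
  elementIndex: number;
  defaultDisplay: string;
  topPosition: number;
}

// Hypothetical description of one call to toggleFixedElementsDisplay.
interface Toggle {
  targets: "footer" | "all";
  show: boolean;
}

// Footer candidates start in the bottom 30% of the viewport (the 70% rule).
function selectFooterElements(
  fixedElements: FixedElement[],
  viewportHeight: number,
): FixedElement[] {
  return fixedElements.filter((el) => el.topPosition >= viewportHeight * 0.7);
}

// Which toggles to apply before taking the screenshot of a given iteration.
function togglesBeforeScreenshot(iteration: number, isLast: boolean): Toggle[] {
  const toggles: Toggle[] = [];
  // First screenshot: keep the header, hide the footer elements.
  if (iteration === 0 && !isLast) toggles.push({ targets: "footer", show: false });
  // From the second screenshot on, no fixed element should be visible.
  if (iteration === 1) toggles.push({ targets: "all", show: false });
  // Last screenshot: bring back the footer elements only.
  if (isLast && iteration > 0) toggles.push({ targets: "footer", show: true });
  return toggles;
}
```

With this schedule, the header appears once at the top of the merged image, the footer once at the bottom, and nothing is repeated in between.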

Adding a Puppeteer browser pool

After intensive testing of our solution, we noticed that our server sometimes crashed due to too many incoming requests. To remedy this, we implemented a pooling system using the generic-pool library which provides a generic resource pool with a Promise-based API.

With generic-pool, we were able to manage multiple instances of a Puppeteer browser in order to use one available instance for each incoming request. Our implementation looks like this:

    const args = ['--no-sandbox', '--disable-setuid-sandbox'],
      MIN_CHROME_POOL = 2,
      MAX_CHROME_POOL = 4,
      ACQUIRE_TIMEOUT = 120000;

    async function createPuppeteerPool() {
      return genericPool.createPool(
        {
          create() {
            return puppeteer.launch({ args });
          },
          // A browser is considered healthy if it answers within 1.5 seconds.
          validate(browser) {
            return Promise.race([
              new Promise(res => setTimeout(() => res(false), 1500)),
              browser
                .version()
                .then(_ => true)
                .catch(_ => false),
            ]);
          },
          destroy(browser) {
            return browser.close();
          },
        },
        {
          min: MIN_CHROME_POOL,
          max: MAX_CHROME_POOL,
          testOnBorrow: true,
          acquireTimeoutMillis: ACQUIRE_TIMEOUT,
          autostart: false,
        },
      );
    }

Once the Puppeteer pool is created, the acquire function can be used for each incoming request.

    const browser = await browserPool.acquire();
    const page = await browser.newPage();

Using database polling

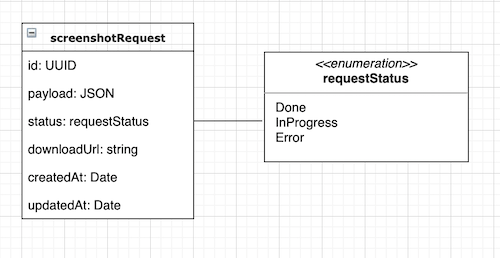

Further testing revealed that some requests for long pages exceeded the server timeout. We therefore implemented a database polling mechanism: the server answers each request with an acknowledgement that contains a unique identifier, which the client then uses to poll. Each identifier is linked to a row in the requests table that holds the status of the request. Once the full page screenshot is done, we update that status so the client can move on to downloading the generated file.
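The flow can be sketched as follows. This is a simplified illustration, not our production code: an in-memory map stands in for the requests table, and the names `RequestTable`, `pollUntilSettled`, the status values and the default interval are all hypothetical.

```typescript
type RequestStatus = "pending" | "done" | "failed";

// Stand-in for the requests table; a real service would use a database.
class RequestTable {
  private rows = new Map<string, RequestStatus>();
  private nextId = 1;

  // Called when a screenshot request arrives. The returned identifier is
  // what the acknowledgement response carries back to the client.
  create(): string {
    const id = `req-${this.nextId++}`;
    this.rows.set(id, "pending");
    return id;
  }

  // Called by the worker once the screenshot is generated (or has failed).
  update(id: string, status: RequestStatus): void {
    if (!this.rows.has(id)) throw new Error(`Unknown request: ${id}`);
    this.rows.set(id, status);
  }

  status(id: string): RequestStatus | undefined {
    return this.rows.get(id);
  }
}

// Client side: ask for the status at a fixed interval until it settles.
async function pollUntilSettled(
  fetchStatus: (id: string) => Promise<RequestStatus | undefined>,
  id: string,
  intervalMs = 1000,
  maxAttempts = 120,
): Promise<RequestStatus> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const status = await fetchStatus(id);
    if (status === undefined) throw new Error(`Unknown request: ${id}`);
    if (status !== "pending") return status;
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error(`Request ${id} is still pending after ${maxAttempts} attempts`);
}
```

Because the initial request returns as soon as the row is created, the HTTP timeout no longer depends on how long the screenshot takes; only the polling budget (`intervalMs` times `maxAttempts` here) bounds the wait.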

Wrapping up

As we have seen, after many iterations we were able to create an efficient server-side solution for full page screenshots that handles edge cases such as fixed elements and long pages. Further improvements made it production-ready: a browser pool built with generic-pool to improve server performance, and database polling to handle long tasks.

The next step for this project is to handle scrollable elements on the page. They could be handled by overlaying new images on top of the generated screenshots. Each of those images would only contain the scrollable element, captured individually with an element.screenshot() call using Puppeteer. We also aim to improve the performance of our algorithm by splitting long tasks into multiple threads that could screenshot parts of the same page in parallel. Stay tuned!