We Love Speed 2021 Conference Recap

Last week the Contentsquare team was at the We Love Speed conference in Lyon, France. It is a conference dedicated to web performance, with speakers from major French e-commerce websites as well as experts and consultants. As the talks were only in French, we thought our international audience would be interested in a summary of what we learned.

We have all been there

Most of the companies shared a similar story: none of them really monitored their performance until it was too late. I am pretty sure many people in the audience could relate. It is only when you start to see a drop in your SEO, revenue and customer satisfaction that you realize you have been neglecting performance for too long. The wake-up call is painful, and you urgently have to put a task force in place to fix months or years of code.

But you most probably do not have any web performance experts in house, so you turn to external specialists like Google or Jean-Pierre Vincent. They know their job and quickly give you a list of 40 items you should fix. Some items in this list will improve performance on mobile, others on desktop. Some will only work on the homepage, while others are for the checkout page.

That is a lot to fix, and you do not have much time, so you need to prioritize. Everybody on your team has a different opinion on what should be fixed first. Without data, they are just opinions, so you check your real production data to identify who your median user is. You are surprised to discover that it is not someone browsing on the latest Mac with a fiber-optic connection like you, but someone on a five-year-old phone with a slow, high-latency connection.

Now that you have prioritized your list by impact, you start working through it item by item. Each new deployment improves your performance, getting you closer to the light at the end of the tunnel. After weeks (or even months) of work, you are about to reach the load time you set as your target. Then you realize that the refactoring that improved Chrome performance by 17% also degraded Safari performance by 25%. You start learning all the odd hacks and workarounds each browser needs, and you keep fixing.

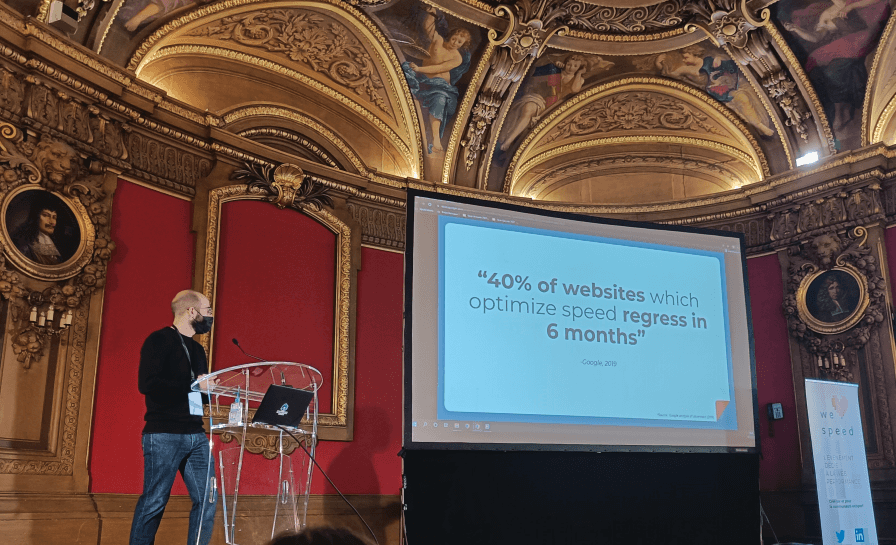

You finally fix your initial production issue, but you are afraid that with the speed at which your codebase is growing, all your optimizations will be outdated in 6 months.

Never again!

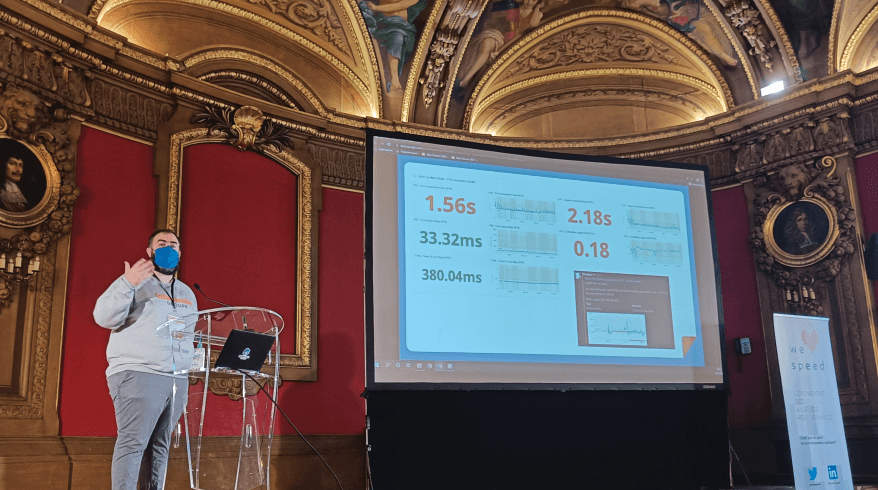

What you need is not a one-time fix, but a long-term solution. You take a deep breath and say to yourself “Never again!”. You plug Datadog and Lighthouse together, or simply use Dareboost. Now you can see that something is going wrong before your customers do. You also add a quick safeguard to your CI: any PR that increases the bundle size by more than 10% is automatically blocked. It is a simple rule, but it keeps code bloat in check.
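To make the CI rule concrete, here is a minimal sketch of the check itself, assuming your build already reports the total bundle size in bytes for both the main branch and the PR branch. The `checkBundleBudget` function and the `BundleReport` shape are hypothetical names for illustration; only the 10% threshold comes from the talks.

```typescript
// Hypothetical CI gate: block a PR whose bundle grows by more than
// `maxGrowth` (10% by default) compared with the main branch.

interface BundleReport {
  totalBytes: number; // total size of the emitted JavaScript, in bytes
}

interface BudgetResult {
  blocked: boolean;
  growthRatio: number; // 0.15 means the bundle grew by 15%
  message: string;
}

function checkBundleBudget(
  base: BundleReport,
  head: BundleReport,
  maxGrowth = 0.1,
): BudgetResult {
  if (base.totalBytes <= 0) {
    throw new Error("Base bundle size must be positive");
  }
  const growthRatio = (head.totalBytes - base.totalBytes) / base.totalBytes;
  // Growth of exactly maxGrowth is allowed; only strictly larger growth blocks.
  const blocked = growthRatio > maxGrowth;
  const pct = (growthRatio * 100).toFixed(1);
  const limit = (maxGrowth * 100).toFixed(1);
  const message = blocked
    ? `Bundle grew by ${pct}% (limit ${limit}%): blocking this PR.`
    : `Bundle size changed by ${pct}%, within budget.`;
  return { blocked, growthRatio, message };
}

// Example: 200 kB on main, 230 kB on the PR branch, so +15% is blocked.
const result = checkBundleBudget({ totalBytes: 200000 }, { totalBytes: 230000 });
console.log(result.message);
```

How you fail the job when `blocked` is true depends on your CI provider. Whether to compare raw or compressed sizes is a judgment call; compressed size is closer to what users actually download.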

You train your teams on the Core Web Vitals: you explain what they are and why they should care about them. You explain them differently to a designer than to a product owner. They might not care for the same reasons, and their work might not have the same impact on the score, but at least the whole team now has an objective measurement of the performance of their code.

You start to notice your designers asking themselves “what should be displayed while the map is loading?”, and your developers keeping track of their Time to Interactive (TTI) before each release. You hear performance talks around the coffee machine, and before you know it your developers are giving talks at We Love Speed. But how could you go even further?

The future is made of micro-frontends

Some companies, like Leroy-Merlin and ManoMano, did go further. For reasons unrelated to web performance, they were frustrated with their monolithic architecture: it was slowing down their release cycles and creating bottlenecks where each team had to wait for other teams' code to be merged. The overall complexity of the code was out of control, and even the deployment process was getting more and more frustrating.

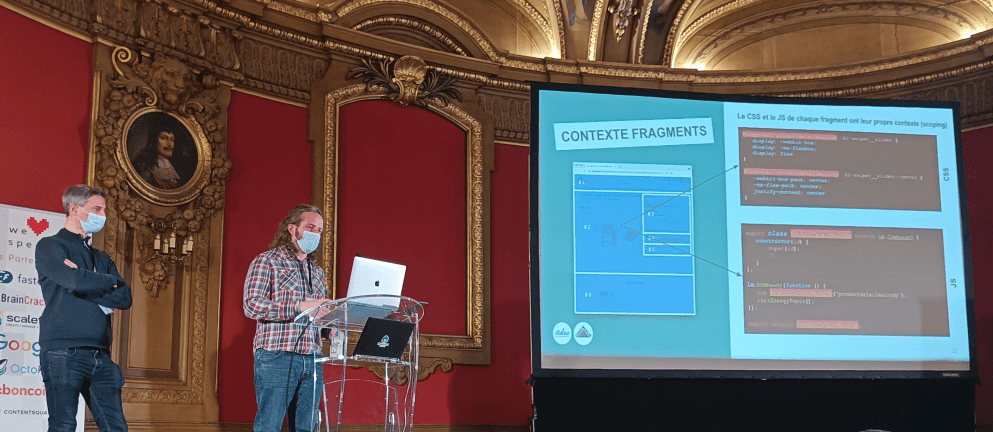

So they split each page into “fragments”. Each fragment can be thought of as a UI component of the page, and a full page might be made of 30 or so fragments. For example, there would be a fragment for the navigation bar, one for the add-to-cart button and one for the similar items carousel. Each fragment is owned by a dedicated team of about 5 people, including developers, a product owner and a designer. If you want to know more about micro-frontends, we documented why and how we migrated to such an architecture ourselves.

When the client requests a page, the backend builds it by replacing placeholders in its template with the latest version of each fragment, and serves the result to the client. Any personalized bit (number of items in the cart, username or stock availability) is fetched dynamically by the frontend. That way, about 80% of the page is rendered on the server and streamed to the browser, and the remaining 20%, which provides interactivity, is lazy-loaded.
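The assembly step can be sketched as a simple string substitution. This is an illustration under assumptions, not how Leroy-Merlin or ManoMano actually implement it: the placeholder syntax, `assemblePage` and the fragment names are hypothetical, and for brevity the sketch builds the whole page as one string instead of streaming it.

```typescript
// Minimal sketch of server-side page assembly from fragments.
// The `<!-- fragment:name -->` placeholder syntax is a hypothetical
// convention; real setups use their own, such as ESI or SSI.

type FragmentRenderer = () => string;

const PLACEHOLDER = /<!--\s*fragment:([a-z0-9-]+)\s*-->/g;

function assemblePage(
  template: string,
  fragments: Record<string, FragmentRenderer>,
): string {
  return template.replace(PLACEHOLDER, (_match: string, name: string) => {
    const render = fragments[name];
    // Unknown fragment: leave an empty slot instead of failing the whole page.
    return render ? render() : "";
  });
}

const template = `<body>
<!-- fragment:nav -->
<main><!-- fragment:add-to-cart --></main>
</body>`;

const html = assemblePage(template, {
  // The cart count is personalized, so the server leaves an empty element
  // that the frontend fills in after fetching it.
  nav: () => '<nav><a href="/cart">Cart <span data-cart-count></span></a></nav>',
  "add-to-cart": () =>
    '<form method="post" action="/cart"><button>Add to cart</button></form>',
});
console.log(html);
```

Because the template only contains placeholders, each team can ship a new version of its fragment renderer without touching the page template or other teams' code.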

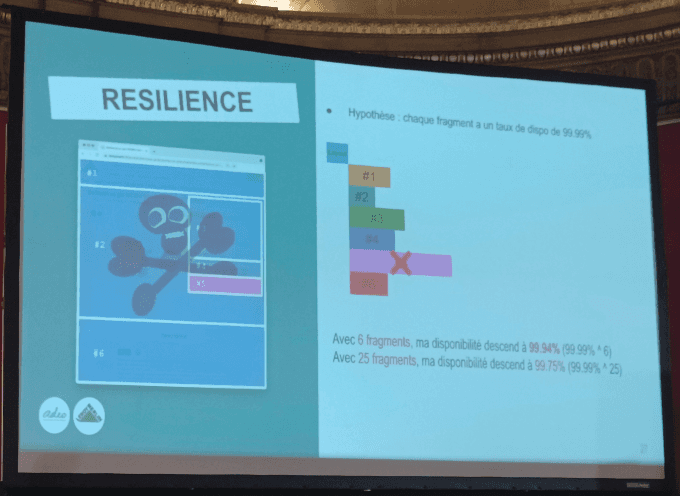

This had a deep positive impact on their time to market, their resilience and, of course, their performance. Because each fragment is responsible for one thing and one thing only, fragments can be released independently, without waiting for other teams' releases. Splitting a complex problem into smaller ones also reduced code complexity and cognitive load, which increased release velocity.

This per-fragment separation also had many beneficial side effects they did not anticipate. For example, they could assign a different priority to each fragment. Fragments marked as secondary (like the similar items carousel) could get specific treatment: they could be disabled during a high traffic peak, lazy-loaded until they entered the viewport, or simply have their errors ignored.
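One way to express those per-fragment rules is a small policy function evaluated on the server. The priority levels, the requests-per-second threshold and the function names below are our assumptions for illustration; on the client, a fragment in `lazy` mode would typically be loaded with an `IntersectionObserver` once it nears the viewport.

```typescript
// Hypothetical per-fragment policy: how to serve a fragment given its
// priority and the current traffic level.

type Priority = "primary" | "secondary";
type RenderMode = "server" | "lazy" | "disabled";

interface FragmentConfig {
  name: string;
  priority: Priority;
}

interface TrafficState {
  requestsPerSecond: number;
  peakThreshold: number; // above this, secondary fragments are shed
}

function renderMode(fragment: FragmentConfig, traffic: TrafficState): RenderMode {
  if (fragment.priority === "primary") {
    return "server"; // always rendered on the server
  }
  if (traffic.requestsPerSecond > traffic.peakThreshold) {
    return "disabled"; // drop non-essential work during a traffic peak
  }
  return "lazy"; // left to the client, loaded when it enters the viewport
}

// Errors in secondary fragments are swallowed; errors in primary ones propagate.
function safeRender(fragment: FragmentConfig, render: () => string): string {
  try {
    return render();
  } catch (err) {
    if (fragment.priority === "secondary") {
      return ""; // the page is still usable without this fragment
    }
    throw err;
  }
}

const carousel: FragmentConfig = { name: "similar-items", priority: "secondary" };
const cartButton: FragmentConfig = { name: "add-to-cart", priority: "primary" };
const peak: TrafficState = { requestsPerSecond: 5000, peakThreshold: 1000 };

console.log(renderMode(cartButton, peak)); // server
console.log(renderMode(carousel, peak)); // disabled
```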

But to me, the most impressive outcome of this split was that they mostly ditched their JavaScript frameworks. Because each fragment does one simple thing, they did not really need a full-fledged framework and could use Vanilla JS for 95% of their widgets. By relying on standard JavaScript events, APIs and DOM manipulation, they drastically cut their JavaScript size and achieved very fast load times.

The fastest JavaScript is no JavaScript

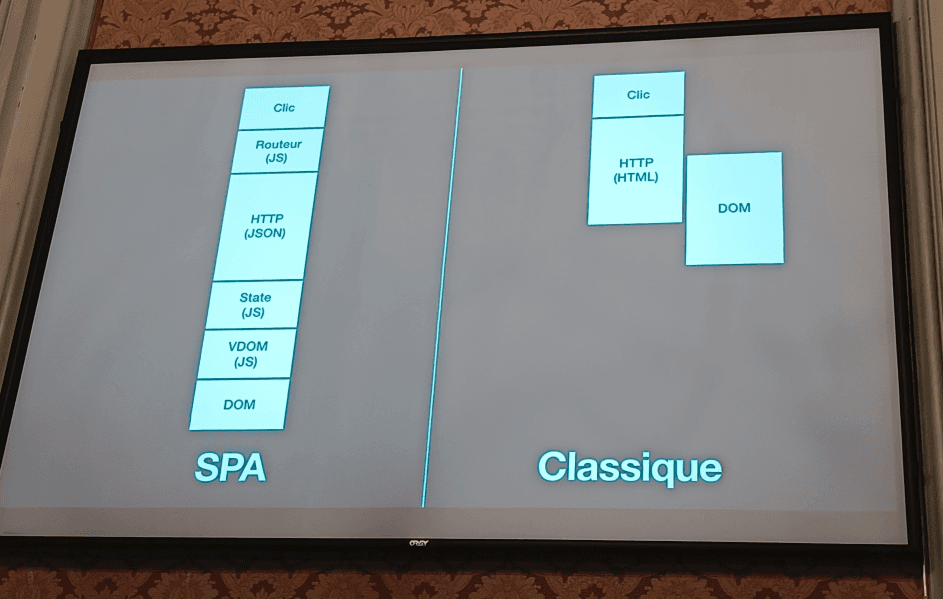

This ties in with something many of the experts repeated during the day: JavaScript will never make your website faster. At best, it will offset the cost of downloading and executing itself.

A common piece of advice for building the fastest fragments was to take a no-JavaScript approach. Just as you would start with the mobile version when integrating a design and then improve it for desktop, when creating a fragment you start with the standard no-JavaScript version and then add JavaScript as a lazy-loaded progressive enhancement.

Today, very few people manually disable JavaScript in their browsers, but a great many people browse on slow connections (mobile, or a badly configured corporate proxy) and may wait up to 10 seconds before your JavaScript is downloaded. For those people, you need to provide a stripped-down version of your fragment that loads quickly. Only once the JavaScript is loaded can you add all the bells and whistles.
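Applied to the add-to-cart fragment, a no-JavaScript-first version could look like the sketch below. It assumes a hypothetical `/cart` endpoint that accepts a regular form POST and a hypothetical enhancement script path; the point is that the form works on its own, and the script only upgrades it once it has loaded.

```typescript
// Sketch of a "no-JavaScript first" add-to-cart fragment. The /cart
// endpoint and the script path are hypothetical.

interface Product {
  id: string;
  name: string;
}

// Escape text before interpolating it into HTML.
function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderAddToCart(product: Product): string {
  const id = escapeHtml(product.id);
  const name = escapeHtml(product.name);
  return [
    // Baseline: a plain form POST works with no JavaScript at all.
    `<form method="post" action="/cart" class="add-to-cart">`,
    `  <input type="hidden" name="productId" value="${id}">`,
    `  <button type="submit">Add ${name} to cart</button>`,
    `</form>`,
    // Enhancement: module scripts are deferred by default, so this does not
    // block rendering. Once loaded, it could intercept the submit event and
    // use fetch() to update the cart without a full page reload.
    `<script type="module" src="/fragments/add-to-cart.js"></script>`,
  ].join("\n");
}

console.log(renderAddToCart({ id: "sku-42", name: "Blue mug" }));
```

A deferred module script is the simplest option here; the enhancement could also be loaded only on first interaction or when the fragment becomes visible.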

The underlying stack of the web, made of HTTP, HTML and CSS, is here to stay. It will only get better, with improved support in all browsers year after year. If you want your web performance to be future-proof, you have to build with those standard blocks.

With Server-Side Rendering, you send the full HTML directly to the browser, which renders it while it is being downloaded (no need for costly JS initialization, JSON parsing and virtual DOM creation). CSS has extensive support for touch scrolling and scroll snapping, which is enough to build slideshows. Sure, the default HTML date picker won’t be as fancy as a JavaScript one, but it also won’t require downloading and executing heavy date formatting libraries. Start with the standard, and lazy-load the fancy. Use progressive enhancement. Always bet on standards.
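As an example of starting with the standard, here is a sketch of a slideshow fragment that relies only on HTML and CSS scroll snapping, with native image lazy loading for slides that are not visible yet. The class names and the `renderSlideshow` helper are hypothetical; a JavaScript enhancement (arrows, autoplay) could still be layered on top later.

```typescript
// Sketch: a slideshow fragment using CSS scroll snapping instead of a
// JavaScript carousel library. Markup and class names are illustrative.

interface Slide {
  src: string;
  alt: string;
}

const SLIDESHOW_CSS = `
.slideshow {
  display: flex;
  overflow-x: auto;              /* native touch and trackpad scrolling */
  scroll-snap-type: x mandatory; /* always settle on a slide boundary */
}
.slideshow > img {
  flex: 0 0 100%;                /* one slide per container width */
  scroll-snap-align: start;
}`;

// Escape attribute values so slide data cannot break the markup.
function attr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

function renderSlideshow(slides: Slide[]): string {
  const images = slides
    .map((s, i) => {
      // Only the first slide loads eagerly; the others use native lazy loading.
      const lazy = i === 0 ? "" : ' loading="lazy"';
      return `<img src="${attr(s.src)}" alt="${attr(s.alt)}"${lazy}>`;
    })
    .join("");
  return `<style>${SLIDESHOW_CSS}</style>\n<div class="slideshow">${images}</div>`;
}

console.log(renderSlideshow([
  { src: "/img/mug-front.jpg", alt: "Mug, front view" },
  { src: "/img/mug-side.jpg", alt: "Mug, side view" },
]));
```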

Conclusion

Web performance is no longer a niche field for engineers obsessed with shaving off a few milliseconds here and there. It now has a proven and direct impact on SEO and revenue, and it can be quantified objectively. Conferences like We Love Speed clearly show that web performance best practices are becoming as ubiquitous as code reviews or automated deployments in quality-oriented teams.

Performance optimisation is not a sprint, it is a marathon. You have to constantly monitor your metrics and keep up to date with the latest browser features. People who master the underlying blocks of any web page (HTTP/HTML/CSS) will have an incredible edge over those who specialized in specific JavaScript frameworks. Invest in markup specialists in your teams.

Hope to see you next year and discuss your own findings!